Artificial Intelligence is often described as the most transformative technology of our lifetime — but behind every chatbot, recommendation engine, or autonomous system lies something invisible yet powerful: energy.

According to a recent Goldman Sachs report, data center power demand is set to surge 160% by 2030, driven largely by AI.
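A 160% increase is easy to misread as "1.6 times as much", when it actually means 2.6 times the starting level. The short Python sketch below makes that arithmetic explicit and converts it to an implied yearly growth rate. The seven-year window is an assumption chosen for illustration, not a figure taken from the report.

```python
# Turn a headline "percent increase" into a multiplier and an implied
# compound annual growth rate.
# ASSUMPTION: the 7-year window is illustrative, not from the report.
ASSUMED_YEARS = 7


def growth_multiplier(percent_increase: float) -> float:
    """A 160% increase means the final level is 1 + 1.6 = 2.6x the start."""
    return 1 + percent_increase / 100


def implied_annual_rate(percent_increase: float, years: int) -> float:
    """Constant yearly growth rate that compounds to the same multiplier."""
    return growth_multiplier(percent_increase) ** (1 / years) - 1


if __name__ == "__main__":
    multiplier = growth_multiplier(160)
    rate = implied_annual_rate(160, ASSUMED_YEARS)
    print(f"Final demand is {multiplier:.1f}x the starting level")
    print(f"Implied growth over {ASSUMED_YEARS} years: {rate:.1%} per year")
```

Under that assumed window, the surge works out to roughly 14 to 15% compound growth every year, which is a useful way to compare it against ordinary grid expansion plans.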

This isn’t just a tech story — it’s an energy revolution in disguise.

AI models, especially large-scale systems like ChatGPT, Gemini, and Claude, require massive computational horsepower to process and generate human-like responses.

Every time an AI model runs an inference (a prediction or output), it consumes energy. Multiply that by billions of queries a day, and the electricity usage quickly becomes staggering.
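To get a feel for how that multiplication plays out, here is a minimal back-of-the-envelope sketch in Python. The per-query energy figure and the daily query volume are hypothetical placeholders, not measured values from any provider, so swap in your own numbers.

```python
# Back-of-the-envelope estimate of inference energy at scale.
# ASSUMPTIONS (illustrative only, not measured values):
ASSUMED_WH_PER_QUERY = 0.3               # watt-hours per query (hypothetical)
ASSUMED_QUERIES_PER_DAY = 1_000_000_000  # one billion queries (hypothetical)


def daily_energy_mwh(wh_per_query: float, queries_per_day: int) -> float:
    """Total daily energy in megawatt-hours (1 MWh = 1,000,000 Wh)."""
    return wh_per_query * queries_per_day / 1_000_000


def annual_energy_gwh(wh_per_query: float, queries_per_day: int,
                      days: int = 365) -> float:
    """Total yearly energy in gigawatt-hours (1 GWh = 1,000 MWh)."""
    return daily_energy_mwh(wh_per_query, queries_per_day) * days / 1_000


if __name__ == "__main__":
    daily = daily_energy_mwh(ASSUMED_WH_PER_QUERY, ASSUMED_QUERIES_PER_DAY)
    yearly = annual_energy_gwh(ASSUMED_WH_PER_QUERY, ASSUMED_QUERIES_PER_DAY)
    print(f"Daily:  {daily:,.0f} MWh")
    print(f"Yearly: {yearly:,.1f} GWh")
```

Even with a fraction of a watt-hour per query, the total lands in the hundreds of megawatt-hours per day under these assumptions, and that covers inference only, not training or cooling.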

- A single AI data center can consume as much electricity as a medium-sized city.

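- Training one large model can generate hundreds of tons of CO₂ emissions: a 2019 study by University of Massachusetts Amherst researchers estimated roughly 626,000 pounds (about 284 metric tons) of CO₂-equivalent for one large transformer trained with neural architecture search.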

In other words: AI doesn’t just think, it burns.

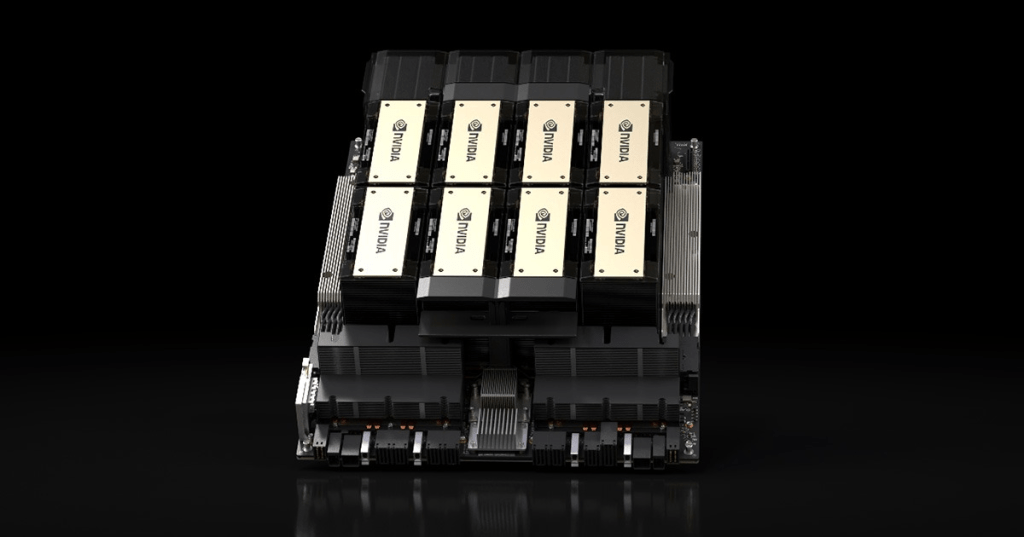

Traditionally, cloud data centers handled storage and web services. But the new wave of AI infrastructure, like the facilities run by NVIDIA, Google, Microsoft, and Amazon, is compute-heavy.

These centers need:

- Constant cooling systems

- High-bandwidth fiber optics

- Specialized chips (GPUs and TPUs)

- Reliable, uninterrupted electricity

This is pushing companies to rethink energy sourcing, with options ranging from renewables to nuclear microgrids.

AI’s growth relies on three interdependent pillars:

- Data – The fuel for machine learning

- Chips – The engines (GPUs, TPUs)

- Energy – The lifeblood of computation

When one of these falters, the entire ecosystem slows. And right now, energy is the next bottleneck.

Even NVIDIA’s CEO Jensen Huang admitted that sustainable AI growth will depend on how efficiently companies can power and cool their chips.

Microsoft recently hired nuclear energy experts to explore small modular reactors (SMRs) for future AI campuses.

Google has partnered with Fervo Energy to power its Nevada data center using next-gen geothermal energy, providing stable, renewable electricity 24/7.

Amazon Web Services (AWS) continues to expand its wind and solar portfolio; its parent company, Amazon, is already the world’s largest corporate buyer of renewable energy.

Goldman Sachs’ analysis estimates that data centers will account for 3–4% of global power demand by 2030, up from 1–2% today. In the U.S. alone, the share is projected to reach about 8%, up from 3% in 2022.

Countries rich in renewable capacity, like India, the U.S., and the UAE, could become AI energy hubs, hosting new types of data centers designed for efficiency.

This means energy policy and AI strategy are now inseparable.

Governments and corporations alike will need to balance progress and sustainability.

When NVIDIA introduced its Blackwell Ultra GPU in 2025, it promised 3x faster AI performance with 40% lower power use.

This innovation directly addresses the looming energy crisis, proving that AI progress must evolve with power efficiency, not against it.

Companies that follow this path will not only save costs but also align with ESG goals, investor expectations, and regulatory frameworks.

The AI revolution won’t be won by algorithms alone; it will be won by those who can power intelligence responsibly.

The 160% surge in data center power demand forecast by Goldman Sachs is a wake-up call.

It’s not just about more data centers; it’s about smarter, greener ones.

“The next trillion-dollar opportunity isn’t AI itself, it’s powering it.”

As AI continues to grow, so does its hunger for power.

But this challenge also opens doors for innovation, from AI-optimized chips to clean-energy data hubs.

If the last decade was about cloud computing, the next one will be about energy intelligence: building systems that are not just smart, but sustainable.

Because in the race to power the world’s smartest machines, the real question isn’t how fast AI can think; it’s how responsibly it can run.
