Introduction: A Breakthrough That Could Redefine AI Hardware

A major milestone in computing has arrived. Researchers have demonstrated that photonic processors, chips that use light instead of electricity, can outperform traditional GPUs in select AI inference tasks.

This breakthrough signals a potential shift in the future of artificial intelligence, especially for companies seeking faster, cooler, and more energy-efficient hardware.

The AI world has been waiting for this moment, and now it’s here.

Headline Recap

- Photonic AI chips show superior performance over GPUs in certain inference tasks.

- Breakthrough proves light-based computation can handle complex neural networks.

- Dramatic reductions in energy consumption and heat output.

- Marks a turning point for the next generation of AI hardware.

Simplified Explanation of the Tech

Traditional processors (CPUs and GPUs) move electrons through silicon circuits. Photonic processors use photons, particles of light, instead.

Why this matters:

- Light can carry many signals at once on different wavelengths, giving optical links very high bandwidth.

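- Light travelling through a waveguide generates very little heat, because there is no electrical resistance to overcome (the lasers, modulators, and detectors around it still draw power).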

- It enables massively parallel computation.

Think of photonic chips as AI accelerators that perform calculations as light passes through them, which makes them very fast for certain workloads such as matrix multiplication and transformer-based inference.

Deeper Analysis

1. The Speed Advantage

Photonic processors excel at matrix-heavy AI tasks, especially large language model inference, because optical pathways handle parallel operations naturally.

This allows photonic chips to deliver lower latency, even under heavy loads.
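To make the parallelism concrete, here is a minimal Python sketch of the matrix-vector product at the heart of neural network inference. It is ordinary software, not a model of any real photonic chip, and the names `dot` and `matvec` are illustrative assumptions. The point it shows is that each output value depends only on its own row of weights, so in principle every row could be computed at the same time.

```python
def dot(row, x):
    """Multiply-accumulate: the basic operation inside a neural network layer."""
    return sum(w * v for w, v in zip(row, x))


def matvec(weights, x):
    """Compute y = W x one row at a time.

    No row needs the result of any other row, so a parallel device could
    evaluate every row simultaneously instead of looping.
    """
    return [dot(row, x) for row in weights]


# Toy 2x3 weight matrix and a 3-element input (made-up values).
weights = [[1, 0, 2],
           [0, 3, 1]]
x = [4, 5, 6]
y = matvec(weights, x)
print(y)
```

A processor that loops over rows pays for each one in turn. Hardware that evaluates all rows at once pays roughly the cost of a single row, which is where the latency advantage described above would come from.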

2. Energy Efficiency Is the Real Game-Changer

High-end data center GPUs draw hundreds of watts each and need dedicated cooling systems. Photonic chips can use a fraction of that energy, which makes them attractive for:

- Data centers

- Edge devices

- Autonomous systems

- High-frequency computing environments

This could cut AI power consumption costs dramatically.
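As a rough illustration of how power draw turns into operating cost, the arithmetic can be sketched in a few lines of Python. Every number here is a placeholder chosen for easy math, not a measurement of any real GPU or photonic chip, and the function names are assumptions made for this example. Cooling overhead is left out for simplicity.

```python
def annual_energy_cost(power_watts, price_per_kwh, hours_per_year=24 * 365):
    """Electricity cost of running a device continuously for a year."""
    kwh = power_watts / 1000 * hours_per_year
    return kwh * price_per_kwh


def savings_fraction(baseline_watts, new_watts):
    """Share of the energy bill saved when power draw drops."""
    return 1 - new_watts / baseline_watts


# Placeholder numbers only, not measurements of any real hardware.
baseline_watts = 500.0
hypothetical_photonic_watts = 100.0
price_per_kwh = 0.10

baseline_cost = annual_energy_cost(baseline_watts, price_per_kwh)
photonic_cost = annual_energy_cost(hypothetical_photonic_watts, price_per_kwh)
saved = savings_fraction(baseline_watts, hypothetical_photonic_watts)
print(f"baseline: {baseline_cost:.2f}, photonic: {photonic_cost:.2f}, saved: {saved:.0%}")
```

Because cost scales linearly with power, any reduction in watts carries straight through to the electricity bill. Swapping in real device figures and local energy prices would give a more meaningful estimate.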

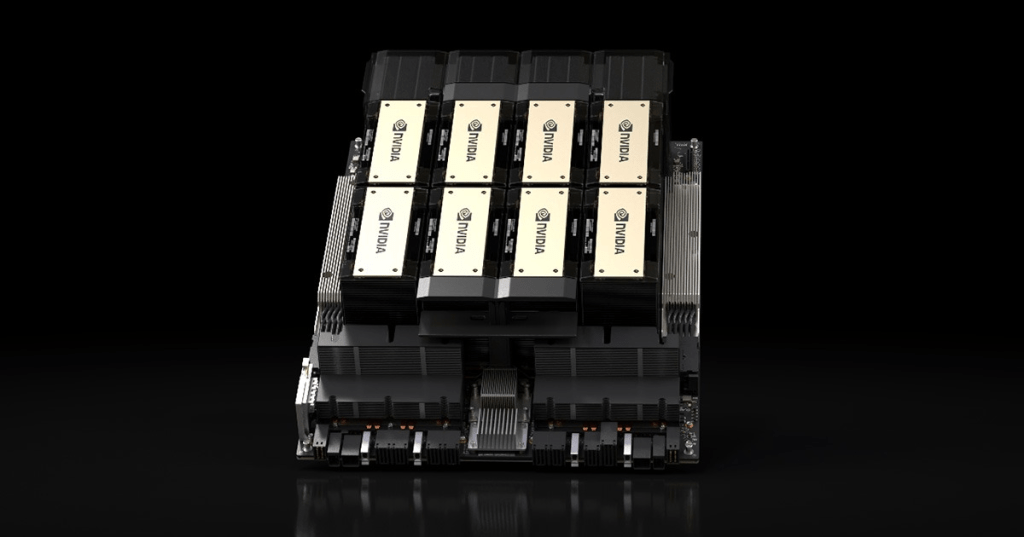

3. Not a GPU Replacement, Yet

Photonic processors are powerful but specialized.

They shine in inference, not training.

The future may therefore lie in hybrid systems that combine GPUs and photonics.
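One way to picture such a hybrid setup is a scheduler that sends inference jobs to a photonic accelerator and everything else to a GPU. The Python sketch below is purely hypothetical: the `Workload` class, the `choose_backend` function, and the backend labels are invented for illustration and do not correspond to any vendor's software.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    kind: str  # "inference" or "training"


def choose_backend(workload, photonic_available=True):
    """Pick a backend for a job in a hypothetical hybrid system."""
    if workload.kind == "inference" and photonic_available:
        return "photonic"
    # Training, and inference when no photonic unit is free, go to the GPU.
    return "gpu"


jobs = [Workload("chat-completion", "inference"),
        Workload("fine-tune", "training")]
plan = {job.name: choose_backend(job) for job in jobs}
print(plan)
```

The GPU is the fallback in this sketch, which matches the idea that photonics supplements existing hardware rather than replacing it.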

4. Surge of Interest From Big Tech & Startups

Companies such as Lightmatter and Lightelligence, along with other startup labs, are pushing commercialization.

Cloud providers and chip manufacturers are exploring partnerships to bring photonics to scale.

Market & Industry Impact

Short-Term Impact

- Chip startups attract major investor interest.

- Data centers begin testing photonic inference modules.

- Demand increases for hybrid compute architectures.

Long-Term Impact

- Photonic AI could become the dominant hardware for inference workloads.

- Energy-efficient AI systems reshape global cloud economics.

- Governments support photonic research for national tech advantages.

- AI hardware competition extends beyond NVIDIA and AMD.

Global Relevance

This breakthrough affects multiple sectors worldwide:

- AI research: Faster, cheaper inference enables new model designs.

- Cloud computing: Massive reductions in operational costs.

- Sustainability: Lower emissions from energy-hungry data centers.

- Manufacturing: Push for new photonic fab capabilities outside traditional semiconductor plants.

As AI demand explodes globally, photonics may become the key to scaling it sustainably.

Conclusion: The Dawn of a New Compute Era

The proof is clear: photonic processors aren’t just experimental anymore.

They’re outperforming GPUs in select AI inference workloads and opening a pathway to ultra-efficient, ultra-fast AI systems.

While GPUs will remain essential, photonics is emerging as the most promising technology to meet the energy and performance demands of the next decade.

The future of AI might be powered not by electrons, but by light.
