Artificial Intelligence is accelerating progress across almost every industry, but with this rapid growth comes an equally rapid rise in risks. As Gartner highlights, AI is not only a catalyst for innovation; it also introduces new concerns around trust, transparency, privacy, bias, and uncontrolled autonomy.

In parallel, Capgemini warns that AI is becoming both a powerful security tool and a high-value target for cyberattacks.

In 2025 and beyond, success with AI is no longer just about capability.

It’s about safety, governance, resilience, and ethics.

Organizations that fail to embed trust and cybersecurity into their AI systems risk not only data breaches, but also reputational damage, legal consequences, and systemic failures.

AI Enables Progress, But Also Introduces New Risks

As AI becomes integrated into decision-making, automation, and mission-critical systems, new categories of risk emerge:

1. Bias & Fairness Issues

Models may inherit or amplify unfair patterns in data, creating discriminatory outcomes in areas like hiring, lending, or healthcare.

2. Privacy & Data Misuse

AI systems often require vast amounts of data, making data protection and compliance more complex.

3. Autonomy Without Human Oversight

Agentic or highly autonomous systems may make decisions that are hard to trace or explain.

4. Lack of Explainability

Most enterprises still struggle to understand why AI makes certain decisions, a major barrier to adoption in high-stakes sectors.

Gartner notes that trust, ethics, and governance are no longer optional; they are now core to AI strategy.
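To make the bias and fairness risk above more concrete, here is a minimal sketch of one common check: comparing selection rates across groups. The function names, group labels, and sample data are hypothetical and chosen only for illustration. A real fairness audit would look at more metrics and far more context than this single ratio.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the share of positive outcomes per group.

    `decisions` is an iterable of (group, approved) pairs, where
    `approved` is True or False. Groups and data are illustrative.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest

# Hypothetical hiring decisions: (applicant group, shortlisted?)
sample = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, round(ratio, 2))
```

In this made-up sample, group B is shortlisted half as often as group A. A ratio well below 1.0 does not prove discrimination, but it is a signal that the model and its training data deserve a closer look.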

Cybersecurity: AI Is Both Weapon and Weak Point

Capgemini emphasizes that in today’s threat landscape, AI has a dual identity:

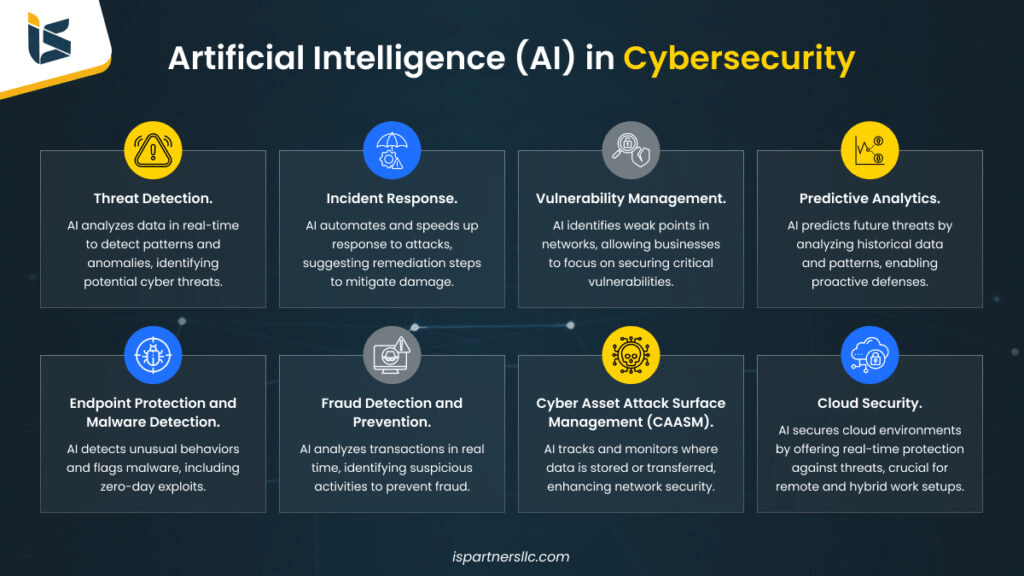

AI as a Tool for Security

AI can:

- detect anomalies in real time

- identify malicious behavior before humans can

- scan massive transaction logs instantly

- automate threat response

- protect cloud systems, networks, and endpoints

This makes AI a frontline defense technology for enterprises.
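As a rough illustration of anomaly detection, the sketch below flags values that sit far from the mean of a series. It uses a simple z-score rule rather than a trained model, and the function name, threshold, and transaction amounts are assumptions made for the example. Production security tooling typically relies on much richer features and learned baselines.

```python
from statistics import mean, stdev

def find_anomalies(values, threshold=3.0):
    """Return (index, value) pairs whose z-score exceeds `threshold`."""
    if len(values) < 2:
        return []  # not enough data to estimate spread
    mu = mean(values)
    sigma = stdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [
        (i, v) for i, v in enumerate(values)
        if abs(v - mu) / sigma > threshold
    ]

# Hypothetical transaction amounts with one obvious outlier
amounts = [20, 22, 19, 21, 23, 20, 18, 22, 21, 20, 19, 950]
flagged = find_anomalies(amounts)
print(flagged)
```

One design caveat: a large outlier inflates the mean and standard deviation it is measured against, so z-scores can miss anomalies in small or heavily contaminated samples. Median-based statistics are a common, more robust alternative.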

AI as a Target for Attack

But the same systems can be exploited:

1. Adversarial Attacks

Tiny, almost invisible input manipulations cause models to misclassify images or data, a huge problem for security, healthcare, and autonomous vehicles.

2. Data Poisoning

Attackers intentionally corrupt training data to produce harmful model behavior.

3. Model Theft & IP Risks

Attackers reverse-engineer or steal models that cost millions in R&D to build.

4. Prompt Injection & Jailbreak Attacks

GenAI systems can be manipulated into revealing sensitive data or bypassing safety constraints.

5. Deepfakes & Synthetic Media

Fabricated audio, video, and images are used for phishing, reputational harm, fraud, and political manipulation.

This creates a new category: AI Security (AISec), defending AI itself from attacks.
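The adversarial attack idea from the list above can be shown on a toy model. The sketch below nudges every input feature by a small amount in the direction that hurts the classifier most, a sign-of-gradient step similar in spirit to the fast gradient sign method. The weights, bias, input, and epsilon are invented for illustration and do not come from any real system.

```python
def predict(weights, bias, x):
    """Linear classifier: return 1 if the score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0

def sign(v):
    """Return -1, 0, or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def perturb(weights, x, label, epsilon):
    """Shift each feature by at most `epsilon` in the direction that
    pushes the score away from `label`. For a linear model the gradient
    of the score with respect to the input is just the weight vector."""
    direction = -1 if label == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]

# Hypothetical toy model and input
weights = [0.9, -0.6, 0.4]
bias = -0.1
x = [0.30, 0.20, 0.10]

original = predict(weights, bias, x)
adversarial = perturb(weights, x, original, epsilon=0.06)
flipped = predict(weights, bias, adversarial)
print(original, flipped)
```

No feature moves by more than 0.06, yet the predicted class changes. Real attacks target far larger models, but the principle is the same: many small, coordinated changes add up to a large shift in the model's output.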

The Push for Safe, Governed, Responsible AI

Organizations are now prioritizing:

1. Safe AI

Systems designed with guardrails, fallback modes, and risk detection.

2. Explainable AI (XAI)

Making decisions transparent, traceable, and understandable to humans.

3. Responsible AI

Ethical standards around fairness, accountability, and human oversight.

4. Governance Frameworks

Clear policies for:

- model usage

- data handling

- approvals

- audits

- compliance

- transparency

5. Regulatory Alignment

Governments are introducing new AI laws and guidance, such as the EU AI Act, U.S. executive orders, and India’s evolving guidelines.

Governance isn’t slowing AI down; it’s enabling sustainable, long-term adoption.
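One way a governance framework might show up in practice is as a release gate that blocks deployment until every policy area has been signed off. The sketch below is an assumption about how such a gate could look, not a description of any specific framework. The check names mirror the policy areas listed above, and `ModelRecord`, `missing_checks`, and `can_deploy` are hypothetical names.

```python
from dataclasses import dataclass, field

# Policy areas taken from the governance list above
REQUIRED_CHECKS = (
    "model_usage",
    "data_handling",
    "approvals",
    "audits",
    "compliance",
    "transparency",
)

@dataclass
class ModelRecord:
    """Hypothetical registry entry tracking which checks a model has passed."""
    name: str
    completed_checks: set = field(default_factory=set)

def missing_checks(record):
    """Return the required checks that have not been completed, in order."""
    return [c for c in REQUIRED_CHECKS if c not in record.completed_checks]

def can_deploy(record):
    """A model is deployable only when no required check is missing."""
    return not missing_checks(record)

record = ModelRecord(
    "credit-scoring-v2",
    {"model_usage", "data_handling", "approvals"},
)
gaps = missing_checks(record)
print(can_deploy(record), gaps)
```

Keeping the required checks in one place makes the policy easy to review and extend, and the list of gaps tells a team exactly what stands between a model and production.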

Why This Matters for Tech Professionals & Businesses

If you’re involved in AI or digital transformation, you must understand:

- Trust is a competitive advantage

- Ethics is brand protection

- Cybersecurity is operational survival

- Governance is non-negotiable

- Safe AI is necessary for scale

AI maturity is no longer about capability; it’s about responsibility.

Organizations that build trust-first, secure-by-design AI systems will lead the future.

Those who ignore governance will face rising risks.

Conclusion: The Next Phase of AI Is Trust-Centered

AI’s potential is enormous, but so are the risks.

The future belongs to companies that don’t just deploy AI, but deploy safe, secure, transparent, and ethical AI.

Cybersecurity, governance, and trust are no longer checkboxes; they are the foundation of scalable, sustainable AI.
