Artificial Intelligence is evolving far beyond text and chat interfaces. The next major wave, already underway, is multimodal, embedded, and physical AI.

This transformation means AI will no longer live only inside screens or clouds. It will live in devices, machines, sensors, robots, vehicles, factories, and physical environments.

Reports from Google Cloud, Capgemini, and industry educators like Simplilearn show that enterprises are rapidly moving toward multimodal and on-device AI systems. Combined with robotics, this marks a new era where digital intelligence shapes the real world.

What Is Multimodal AI?

For years, AI systems were dominated by text: chatbots, language models, and search agents.

But now, AI understands images, sound, video, sensor data, spatial maps, and even physical actions.

Multimodal AI combines:

- Vision (cameras, image recognition)

- Audio (speech, environmental sound)

- Sensors (temperature, motion, pressure, LiDAR, biometrics)

- Text & language

- Spatial understanding

This makes AI capable of perceiving the world more like humans do.

Examples include:

- AI that reads X-rays + listens to breathing patterns + analyzes blood data

- Cars that see lanes, hear alarms, detect obstacles via sensors

- Supply chains where cameras + RFID + predictive analytics work together

- Smart homes understanding gestures + voice + movement

Multimodal intelligence is the foundation for intelligent automation across industries.
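One simple way to picture how signals from several modalities are combined is "late fusion": each modality produces its own confidence score, and the scores are merged into one. The sketch below is only an illustration. The `ModalityReading` class, the `fuse` function, and all scores and weights are hypothetical, and real multimodal models usually fuse information in far more sophisticated ways.

```python
from dataclasses import dataclass


@dataclass
class ModalityReading:
    name: str      # e.g. "vision", "audio", "sensor"
    score: float   # confidence in [0, 1] that the event is present
    weight: float  # how much this modality is trusted (assumed value)


def fuse(readings: list[ModalityReading]) -> float:
    """Late fusion: weighted average of the per-modality scores."""
    total_weight = sum(r.weight for r in readings)
    if total_weight == 0:
        raise ValueError("at least one modality needs a non-zero weight")
    return sum(r.score * r.weight for r in readings) / total_weight


# Made-up readings for a single event seen by three modalities.
readings = [
    ModalityReading("vision", 0.9, 2.0),
    ModalityReading("audio", 0.6, 1.0),
    ModalityReading("sensor", 0.3, 1.0),
]
fused = fuse(readings)
print(f"fused score: {fused:.3f}")  # fused score: 0.675
```

Giving vision a higher weight here is an arbitrary choice; in practice the weights would be learned or tuned per use case.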

Embedded AI: Intelligence Moving On-Device

A major shift is happening:

AI is running on-device instead of relying on the cloud.

Why this matters:

1. Faster decisions

Embedded AI removes the network round trip to the cloud, which cuts latency and makes it a good fit for safety-critical environments.

2. More privacy

Data stays on the device, reducing compliance risks.

3. Lower costs

Less cloud usage = lower operational costs.

4. Works offline

This is huge for healthcare, industrial environments, defense, and remote locations.

Capgemini and Google Cloud report that edge AI is becoming a strategic priority in 2025 and beyond.

Examples of embedded/edge AI:

- Smart cameras that analyze footage locally

- Industrial sensors predicting equipment failures

- Wearables tracking health in real time

- Drones making autonomous navigation decisions

- On-device AI chips in smartphones and appliances

Embedded AI is the step that moves intelligence close to the physical world.
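To make the idea of local analysis concrete, here is a minimal sketch of the kind of lightweight check an industrial sensor could run on the device itself, without sending raw data to the cloud. It uses a rolling z-score to flag unusual readings. This is plain anomaly detection, not a full failure-prediction model, and the `RollingAnomalyDetector` class, the window size, the threshold, and the sample readings are all assumptions chosen for illustration.

```python
from collections import deque
from statistics import mean, stdev


class RollingAnomalyDetector:
    """Flags readings that deviate strongly from a recent window."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.values = deque(maxlen=window)  # keeps only recent readings
        self.threshold = threshold          # z-score cut-off (assumed)

    def update(self, value: float) -> bool:
        is_anomaly = False
        if len(self.values) >= 5:  # wait for a few samples before judging
            mu = mean(self.values)
            sigma = stdev(self.values)
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.values.append(value)
        return is_anomaly


# Simulated vibration readings with one obvious spike (made-up data).
detector = RollingAnomalyDetector(window=20, threshold=3.0)
readings = [50.0, 50.2, 49.9, 50.1, 50.0, 49.8, 50.3, 50.1, 75.0, 50.0]
flags = [detector.update(r) for r in readings]
print(flags.index(True))  # 8, the position of the spike
```

Only the boolean flag would need to leave the device, which is where the privacy and bandwidth benefits come from. A sturdier version would keep flagged readings out of the window so that one spike does not distort later statistics.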

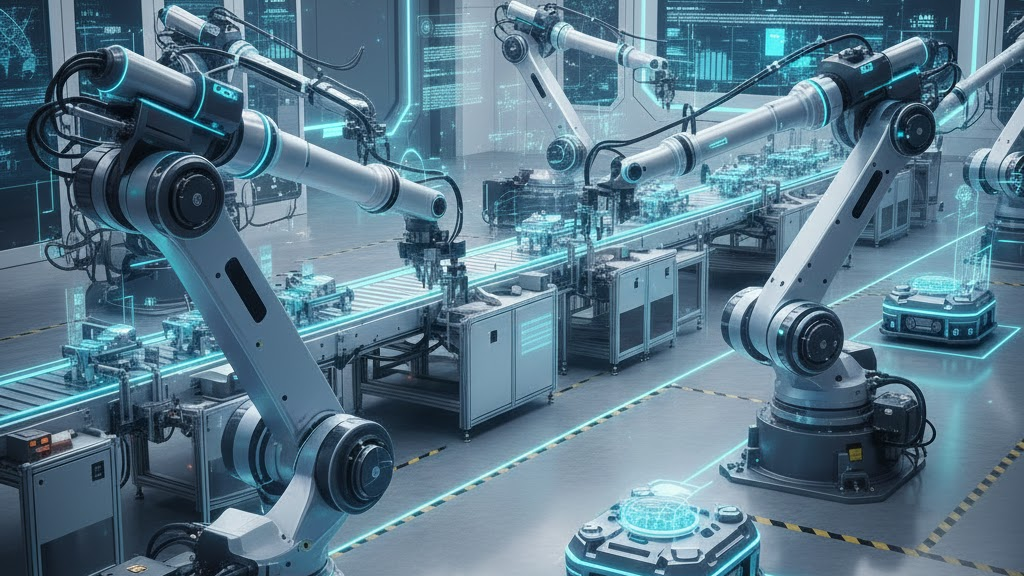

Physical AI & Robotics: When AI Meets the Real World

Physical AI refers to robots, autonomous machines, and intelligent devices that can perceive, decide, and act.

This includes:

- Industrial robots

- AI-powered logistics arms

- Autonomous vehicles

- Healthcare robots

- Warehouse automation bots

- Field robotics for construction, mining, agriculture

- Smart medical devices and surgical assistants

Advancements in chips, sensors, mobility, and control systems are accelerating the rise of Physical AI.

According to Simplilearn, this is one of the biggest shifts in the AI industry: AI is leaving the virtual world and entering the physical one.
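The perceive, decide, and act cycle that defines Physical AI can be sketched as a small control loop. The example below is a toy illustration only: the function names, distance thresholds, and speeds are hypothetical, and a real robot would rely on proper sensor drivers, planning, and safety systems.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    distance_to_obstacle_m: float


def perceive(raw_distance_m: float) -> Observation:
    """Turn a raw sensor value into a cleaned-up observation."""
    return Observation(distance_to_obstacle_m=max(raw_distance_m, 0.0))


def decide(obs: Observation,
           stop_distance_m: float = 0.5,
           slow_distance_m: float = 2.0) -> str:
    """Pick a command based on how close the nearest obstacle is."""
    if obs.distance_to_obstacle_m <= stop_distance_m:
        return "stop"
    if obs.distance_to_obstacle_m <= slow_distance_m:
        return "slow"
    return "cruise"


def act(command: str) -> float:
    """Map a command to a target speed in m/s (assumed values)."""
    speeds = {"stop": 0.0, "slow": 0.3, "cruise": 1.0}
    return speeds[command]


def control_step(raw_distance_m: float) -> float:
    return act(decide(perceive(raw_distance_m)))


# Simulated distance readings as the robot approaches an obstacle.
simulated = [5.0, 1.5, 0.4]
speeds = [control_step(d) for d in simulated]
print(speeds)  # [1.0, 0.3, 0.0]
```

Keeping the three stages as separate functions mirrors the definition above and makes it easy to swap in a better sensor or a smarter decision policy independently.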

Why This Trend Is Exploding Right Now

1. Enterprise Need for Automation

Manufacturing, healthcare, and logistics, all facing labor shortages and cost pressures, are turning to physical automation.

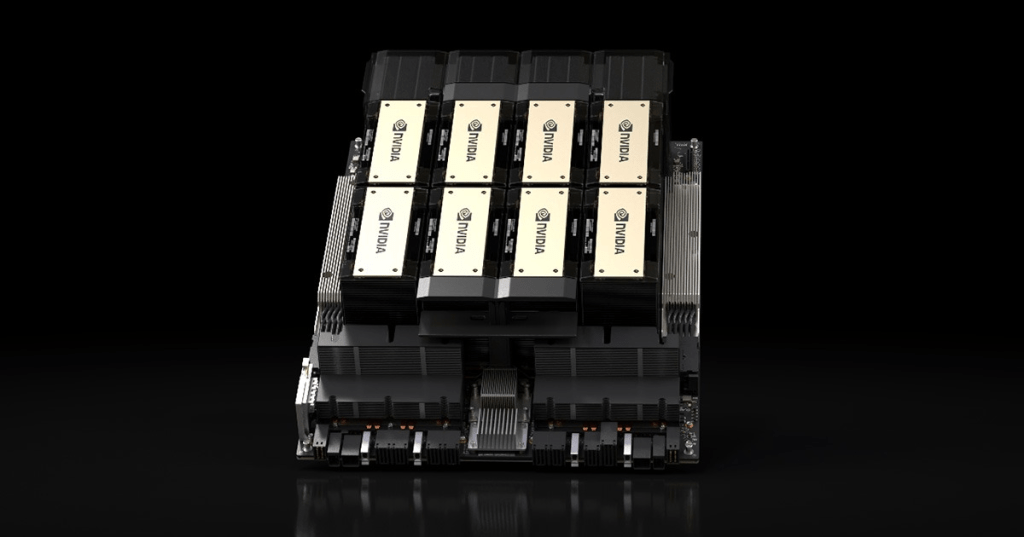

2. Edge Computing Is Maturing

Smaller AI chips with high compute power are enabling local intelligence.

3. Multimodal Models Are Getting Better

Models can now understand images, videos, sounds, and physical instructions.

4. Robotics Is Becoming Cheaper and More Flexible

More companies can deploy robots for daily operations.

The convergence of these forces is accelerating adoption across industries.

Where We Will See the Biggest Impact

Manufacturing

AI-driven robotic arms, defect detection, predictive maintenance, and autonomous quality control.

Logistics & Warehousing

Drones, automated forklifts, sorting robots, real-time tracking through multimodal sensors.

Healthcare

AI medical devices, bedside robots, remote monitoring tools, and real-time diagnostics using multimodal data.

Consumer Tech

Smart appliances, home robots, and wearables, all running on-device AI.

Mobility

AI-powered navigation, autonomous driving systems, safety sensors, and roadside predictive alerts.

The Bigger Picture: AI That Lives Everywhere

This is not just an upgrade; it’s a shift toward ambient intelligence.

AI will exist:

- In walls

- In machines

- In vehicles

- In devices

- In sensors

- In robots

- In the environment around us

Multimodal + embedded + physical AI is the foundation for:

- Smart factories

- Smart hospitals

- Smart homes

- Smart cities

- Smart vehicles

- Smart everything

Conclusion: The Next Era of AI Is Tangible, Real, and Everywhere

AI is no longer just a digital assistant.

It is becoming a physical force, interpreting environments, making decisions, and taking action.

Multimodal systems give AI perception.

Embedded systems give AI speed and privacy.

Physical AI gives AI presence.

Together, these trends will redefine industries and everyday life across the world.
