The global race to build the most powerful AI models is shifting from software innovation to hardware dominance, and Nvidia, long considered the king of AI chips, is beginning to feel the pressure.

According to The Times of India, major tech giants such as Google and, reportedly, Meta Platforms are accelerating efforts to build their own AI accelerator chips. This move signals a strategic shift that could reshape the multibillion-dollar AI hardware market.

For years, Nvidia’s GPUs have been the gold standard for training and deploying large-scale AI systems. But the rise of in-house chip development is opening a new chapter in the AI ecosystem, one defined by competition, control, and supply-chain power.

Tech Giants Want Control, And Custom Chips Offer It

Companies like Google and Meta aren’t just exploring their own hardware out of curiosity. They want:

More efficiency

AI workloads are exploding in complexity, and custom chips can be optimized for internal models and data centers.

Lower costs

Nvidia GPUs are expensive and often in short supply. Owning the chip pipeline reduces dependence on a single supplier.

Faster scaling

Cloud providers can build hardware that closely aligns with their AI roadmaps.

Energy optimization

AI models consume enormous amounts of power. Purpose-built chips can reduce the energy strain on data centers.

This means the AI hardware landscape is moving from one dominant supplier to a multi-player battlefield.

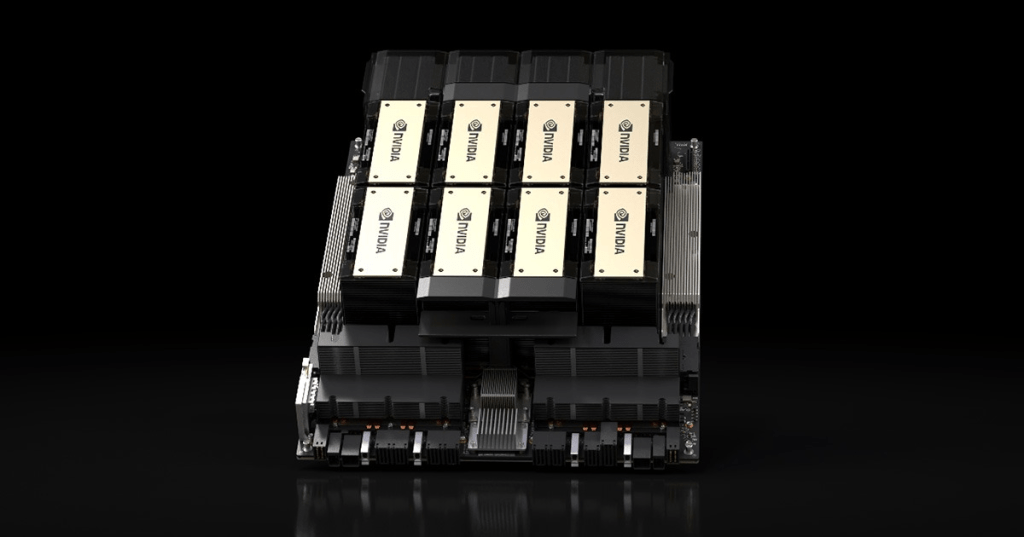

Nvidia Says It’s Still “A Generation Ahead”, But Investors Are Nervous

Nvidia insists that its GPUs remain one full generation ahead of competitors.

And in many ways, that’s true:

- Exceptional performance

- Strong developer ecosystem

- Industry-wide adoption

- CUDA dominance

Yet, despite this confidence, markets reacted sharply to reports of increasing in-house chip development. Investor sentiment dipped as analysts questioned whether Nvidia’s long-term dominance is at risk.

Why the concern?

- If major clients build their own chips, Nvidia could lose massive revenue streams.

- Competition could drive prices down, narrowing margins.

- Hyperscalers scaling internal hardware could reshape the supply chain.

The chip war is no longer theoretical; it’s happening now.

The New Frontier of AI: Hardware, Supply Chains & Energy

The rapid expansion of AI isn’t just about algorithms anymore.

The bottlenecks, and opportunities, now lie in:

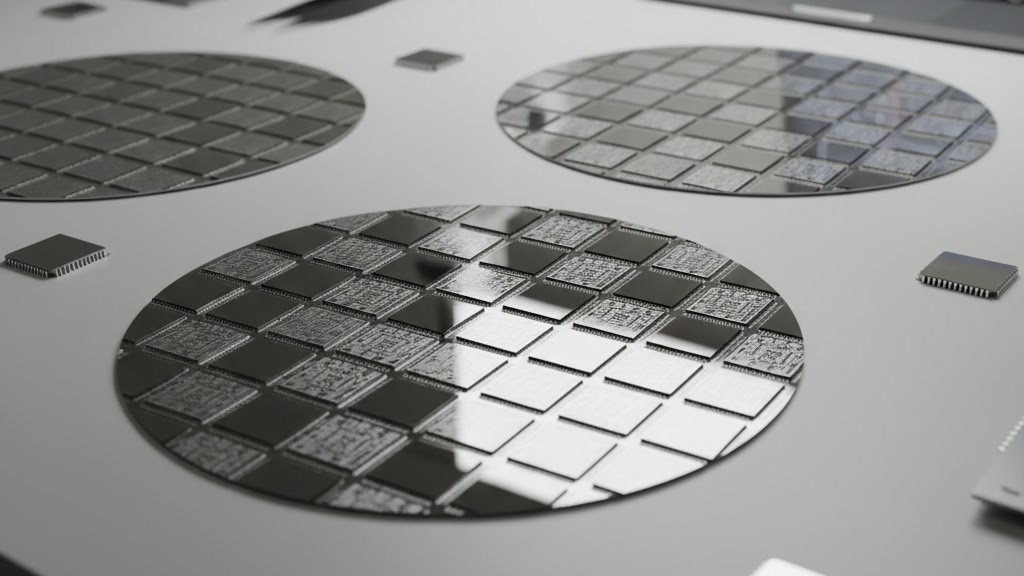

Hardware design

Custom accelerators, AI-specific architectures, low-power NPUs.

Supply chain control

Securing manufacturing capacity at foundries such as TSMC, Samsung, and Intel Foundry.

Energy consumption

Data centers must handle massive thermal and power loads.

Infrastructure dependency

Governments increasingly view chip manufacturing as a national security priority.

We are entering an era where chips, data centers, and energy grids matter as much as research labs and software code.

What This Means for the Future of AI

1. Big Tech Will Push Toward Vertical Integration

From hardware → cloud → models → applications.

2. Competition Will Spark Faster Innovation

Better chips, more efficiency, more experimentation.

3. Countries Will Prioritize Chip Independence

The AI race is geopolitical, not just technological.

4. Nvidia Will Need To Innovate Even Faster

Leadership is not guaranteed, especially in a market this dynamic.

Conclusion

The AI chip war is accelerating, and the balance of power is shifting.

Nvidia remains a giant, but giants can be challenged, especially when the challengers are companies like Google and Meta with deep pockets, massive datasets, and global infrastructure.

The future of AI now depends as much on hardware precision as on model intelligence.

The real battle is no longer happening in code; it’s happening in silicon.
