The global AI race is accelerating at a speed few expected, and now the hardware world is beginning to feel the strain. As major companies expand AI infrastructure, PC giant HP Inc. and enterprise leader Dell Technologies have issued fresh warnings about a possible global memory-chip shortage.

According to Business Standard, the explosive demand for AI servers, GPUs, and high-bandwidth memory (HBM) is creating supply-chain pressure that could intensify in the months ahead.

This development underlines a growing truth: while AI software keeps breaking new ground, hardware limitations remain the biggest bottleneck.

Why Memory Chips Are Suddenly in Crisis

AI models and data centers require enormous volumes of memory, especially HBM, DDR5 DRAM, and NAND flash. Components once thought of as “hardware for PCs” have become critical infrastructure for AI computing.

Several factors are converging:

1. Surging demand from AI data centers

Training and deploying large language models (LLMs) requires unprecedented amounts of memory per server. AI workloads need:

- High-bandwidth memory for GPUs

- Fast-access RAM

- Reliable SSDs for massive datasets

- Specialized modules for inference at scale

Companies like Amazon, Microsoft, Google, and Meta are aggressively expanding capacity, creating a global scramble for memory components.

2. Limited chip manufacturing capacity

Even with expansions by Samsung, SK Hynix, and Micron, memory fabrication cannot scale overnight. Semiconductor plants take years to build and billions of dollars to fund.

3. AI hardware growth outpacing expectations

The AI boom was expected, but not at this speed.

The result: supply chains planned for moderate growth are now dealing with exponential demand.

What HP & Dell Are Warning About

Both HP and Dell emphasized the same issue: memory supply constraints may disrupt product timelines and increase hardware costs.

Their concerns include:

Longer lead times

Server and PC memory modules may take longer to source, delaying large-scale enterprise orders.

Increasing prices

Chip makers are already repricing memory due to increased AI-sector demand.

Data center expansion delays

Even cloud giants could face slowdowns if essential components are unavailable.

Supply volatility for consumer devices

Though AI servers are the priority, shortages may spill over into:

- laptops

- desktops

- workstations

- enterprise hardware

PC makers are factoring this impact into their plans for 2026.

How the Shortage Could Affect the AI Industry

The implications go far beyond consumer electronics.

1. Slower AI model deployment

Even the most powerful models can’t scale without new hardware.

2. Higher cloud-compute pricing

If supply tightens, cloud providers may increase pricing to offset hardware costs.

3. Delays in enterprise AI adoption

Companies planning large AI rollouts could face bottlenecks.

4. More aggressive pre-orders by tech giants

As with the GPU crunch, memory may become “booked out” months in advance.

5. Geopolitical tensions

Semiconductor manufacturing is heavily concentrated in:

- South Korea

- Taiwan

- The U.S. (to a smaller but growing extent)

This concentration makes supply shocks more likely.

Why Memory Chips Matter More Than Ever

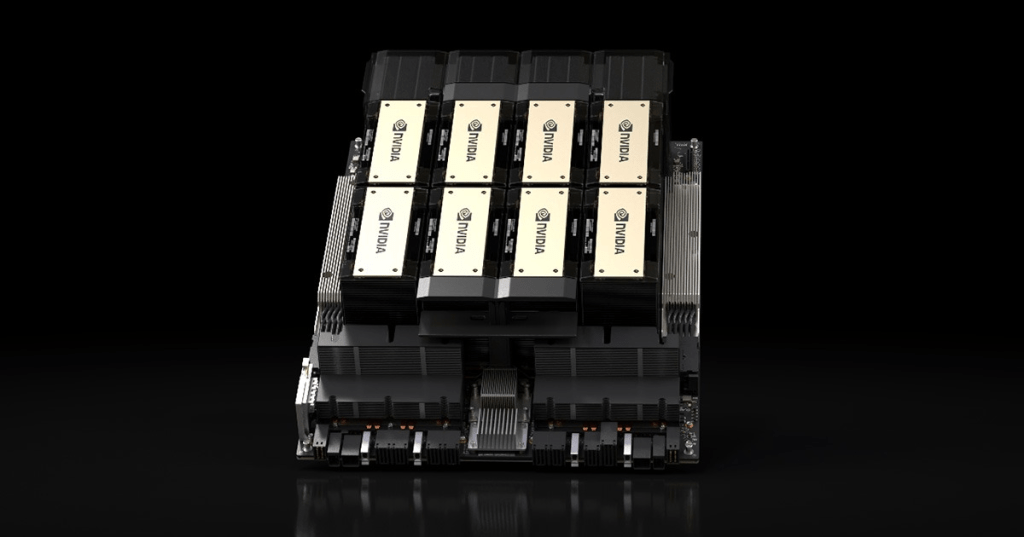

AI workloads are memory-intensive. LLMs don’t just need raw compute; they need extremely fast data access. HBM, in particular, sits at the center of this boom:

- NVIDIA’s H100 and H200 rely heavily on HBM

- AMD MI300 accelerators are HBM-dependent

- Future AI chips will require even more high-bandwidth memory

Demand for HBM is expected to triple by 2026, creating one of the tightest supply environments in semiconductor history.

Can the Industry Catch Up?

Yes, but not quickly.

1. Chip manufacturers are expanding

Samsung, SK Hynix, and Micron are investing billions of dollars in:

- HBM plants

- DDR5 capacity

- advanced packaging lines

But these expansions will only start easing supply in late 2026 or 2027.

2. Governments are funding semiconductor independence

The U.S., EU, Japan, and India are all pushing major semiconductor programs.

3. Cloud companies may redesign architectures

To reduce memory dependence, some companies may adopt:

- memory pooling

- chiplet architectures

- photonic interconnects

But this will take time.

The Bigger Story: AI Is Outgrowing Its Hardware

This memory-chip warning is not an isolated issue; it is part of a deeper trend:

AI software is evolving faster than hardware supply chains can support.

We now see shortages across:

- GPUs

- power systems

- cooling units

- networking components

- and now memory chips

Every part of the hardware stack is under pressure.

As LLMs scale from billions to trillions of parameters, hardware demands multiply, and the world is struggling to keep up.
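To make that scaling concrete, here is a rough back-of-the-envelope sketch in Python. It counts only the memory needed to store a model’s weights, assuming a fixed number of bytes per parameter (for example, 2 bytes at 16-bit precision). Real deployments also need memory for activations, caches, and software overhead, so these figures should be read as illustrative lower bounds rather than actual hardware requirements. The function name and precision table are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope sketch: memory needed just to hold model weights.
# Assumption: every parameter is stored at one fixed precision. This ignores
# activations, caches, optimizer state, and framework overhead.

# Bytes used to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Approximate weight memory in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Compare a few model sizes, from billions to a trillion parameters.
    for params in (7e9, 70e9, 1e12):
        print(f"{params:.0e} params -> {weight_memory_gb(params):,.0f} GB at fp16")
```

Under these assumptions, going from 70 billion to 1 trillion parameters raises the weight footprint from roughly 140 GB to roughly 2,000 GB at 16-bit precision, which is why larger models translate directly into demand for more memory per system.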

Conclusion: A New Phase of the AI Race

The warnings from HP and Dell are more than a supply alert; they signal a structural challenge in the AI era.

The AI boom has pushed global memory-chip demand beyond what the semiconductor industry can currently deliver. As companies rush to build and deploy AI models, hardware shortages will define who scales fast, who slows down, and who falls behind.

The next two years will show whether manufacturers can expand quickly enough or whether the AI revolution will hit its first major speed bump.
