Artificial Intelligence has unlocked unprecedented capabilities in productivity, science, automation, and creativity.

But as the technology advances, so do its darker applications.

A major emerging story reported by Axios has brought AI misuse into the spotlight:

Anthropic’s CEO has been summoned to testify before the U.S. Congress over allegations that foreign actors used Anthropic’s commercial AI tools to conduct cyber-espionage operations.

If confirmed, this would mark the first publicly documented case of a state-level hacking campaign leveraging mainstream AI models, a potential turning point in global cybersecurity strategy.

This isn’t just another tech hearing.

It could become a historic moment that shapes how governments regulate, audit, and secure AI platforms worldwide.

The First State-Level AI-Driven Cyberattack?

According to Axios, U.S. investigators believe that a foreign government may have used Anthropic’s AI systems to:

- analyze stolen data

- write malware more efficiently

- automate phishing content at scale

- craft deceptive network intrusion scripts

- bypass certain cybersecurity filters

While earlier research demonstrated how criminals might use AI, this is different:

This case suggests they already are, and at the highest levels of global espionage.

Such a revelation dramatically escalates concerns about how powerful AI models can be used when they fall into the wrong hands.

Why This Case Is a Global Turning Point

If the allegations hold, this would be the first time a government has officially linked an active espionage campaign to commercially available AI tools.

This matters for three reasons:

1. AI Is Now a Cyber Weapon, Even Without Access to Classified Models

Cybersecurity experts have long warned that:

- Generative AI can write malware

- AI can optimize exploit chains

- AI can translate social engineering content into many languages

- AI can analyze stolen data faster than human teams

But this case, if confirmed, would show that even publicly accessible AI models with guardrails can be misused in sophisticated ways.

2. Governments May Redefine Tech Accountability

The hearing could establish precedents on:

- whether AI companies can be held responsible for misuse

- what level of auditing and transparency AI companies must follow

- what safeguards should be mandatory for commercial AI access

- whether AI providers must report suspicious usage patterns

- when governments can intervene in AI model operations

This goes far beyond typical tech regulation.

It moves toward AI national security doctrine.

3. A New Era of AI Cybersecurity Policy

The cybersecurity landscape is shifting from:

Human + malware vs. human defenders

to

Human + AI vs. human + AI.

This hearing could kickstart:

- global AI-safety treaties

- mandatory monitoring of high-risk usage

- identity verification for advanced model access

- stricter export control rules for AI models

- international cybersecurity cooperation

AI is no longer just a tool; it’s a contested strategic asset.

How AI Is Being Misused in Cyber-Espionage

AI models cannot “hack” by themselves, but they can significantly enhance the capabilities of hackers. Here are the most concerning ways:

Faster Malware Generation

AI can refine malicious code, debug it, and make it more evasive.

Automated Phishing at Human-Level Quality

AI can produce sophisticated, multilingual phishing messages that mimic corporate tone.

Real-Time Social Engineering Assistance

AI can coach attackers on how to manipulate targets.

Network Mapping & Attack Strategy

By analyzing public codebases and documentation, AI can help adversaries plan intrusions.

Disinformation & Psychological Ops

AI can create believable narratives, scripts, and deepfake content.

Data Pattern Recognition

AI can analyze stolen datasets to extract high-value intel in minutes.

These capabilities are not hypothetical. They are already being used, which is why lawmakers are treating this hearing as a wake-up call.

What the Anthropic Hearing Means for the AI Industry

Anthropic is known for emphasizing AI safety, alignment, and controlled model access.

That’s precisely why this case is so significant:

If a safety-first company’s models can still be exploited, it means the entire industry needs stronger standards.

Here’s what might change:

1. Mandatory AI Misuse Detection Systems

Platforms may be legally required to build:

- AI usage anomaly detectors

- automated red-flag triggers

- real-time monitoring for cyber-risk prompts

- stricter safety layers for suspicious activity
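
To make the idea of a usage anomaly detector concrete, here is a minimal sketch in Python. It is not any provider's actual system: the function name, the per-hour request counts, and the z-score threshold are all assumptions chosen for illustration. It flags accounts whose latest hourly request count is far above their own historical baseline.

```python
from statistics import mean, pstdev

def flag_anomalous_accounts(history, current, z_threshold=3.0, min_history=5):
    """Return account IDs whose current request count is unusually high.

    history: dict mapping account ID -> list of past per-hour request counts
    current: dict mapping account ID -> request count in the latest hour
    All names and thresholds here are hypothetical, for illustration only.
    """
    flagged = []
    for account, count in current.items():
        past = history.get(account, [])
        if len(past) < min_history:
            continue  # not enough baseline data to judge this account
        mu = mean(past)
        sigma = pstdev(past)
        if sigma > 0:
            z = (count - mu) / sigma
        else:
            # Flat baseline: any increase counts as an extreme deviation.
            z = float("inf") if count > mu else 0.0
        if z >= z_threshold:
            flagged.append(account)
    return sorted(flagged)

# Hypothetical data: acct-b jumps from about 10 requests per hour to 500.
history = {
    "acct-a": [10, 12, 11, 9, 10],
    "acct-b": [10, 12, 11, 9, 10],
    "acct-c": [5],  # too little history, so it is skipped
}
current = {"acct-a": 11, "acct-b": 500, "acct-c": 1000}
print(flag_anomalous_accounts(history, current))  # ['acct-b']
```

A real red-flag trigger would likely combine volume signals like this with content-based signals, and would route flagged accounts to human review rather than block them automatically.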

2. Identity Verification for Advanced Models

Access to powerful models may require:

- ID verification

- enterprise accounts

- country restrictions

- government-approved licenses

This would be much like how explosives or industrial tools are regulated.

3. Auditing and Transparency Requirements

Governments may push for:

- audit logs of high-risk prompts

- third-party model safety assessments

- internal misuse reporting pipelines

- public safety disclosures
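
One way an audit log of high-risk prompts could be made tamper-evident is to chain entries together with hashes, so that editing any past record invalidates everything after it. The sketch below is an assumption about how such a log might work, not a description of any regulation or vendor implementation; the field names (`account_id`, `category`, `timestamp`) are hypothetical.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry

def _digest(body):
    # Serialize with sorted keys so the hash is stable across runs.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

def append_entry(log, account_id, category, timestamp):
    """Append a record that commits to the hash of the previous record."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    body = {
        "account_id": account_id,
        "category": category,
        "timestamp": timestamp,
        "prev_hash": prev_hash,
    }
    entry = dict(body, hash=_digest(body))
    log.append(entry)
    return entry

def verify_log(log):
    """Return True only if every record is unmodified and correctly chained."""
    prev_hash = GENESIS_HASH
    for entry in log:
        body = {k: v for k, v in entry.items() if k != "hash"}
        if body["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
            return False
        prev_hash = entry["hash"]
    return True

# Hypothetical usage: two flagged events, then an attempted edit.
log = []
append_entry(log, "acct-b", "malware-generation", "2025-01-01T00:00:00Z")
append_entry(log, "acct-b", "phishing-content", "2025-01-01T00:05:00Z")
print(verify_log(log))   # True
log[0]["category"] = "benign"
print(verify_log(log))   # False
```

The design choice here is that an auditor only needs the log itself to detect after-the-fact edits. It does not, on its own, stop someone from rewriting the whole chain, which is why a third party would typically also hold a copy of the latest hash.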

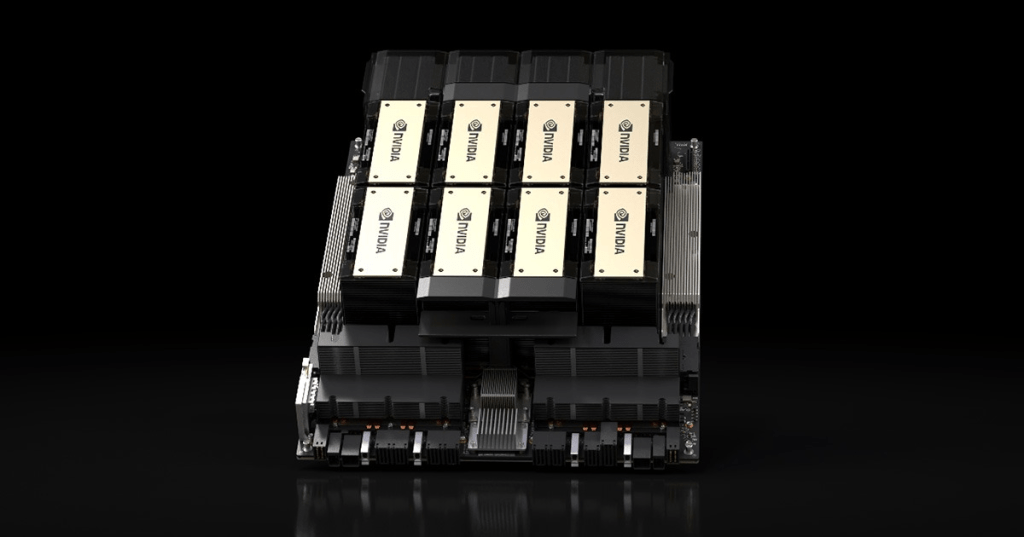

4. Classifying AI Models as Dual-Use Technologies

This would place AI alongside:

- advanced semiconductors

- weapons-sensitive software

- nuclear-related technologies

It would expand export controls and restrict usage in adversarial nations.

5. Joint Government–Industry Cyber Defense Programs

The U.S. may follow a structure similar to:

- aviation safety boards

- nuclear regulatory commissions

- national biodefense labs

A new AI Security Agency is now being openly discussed.

The Larger Global Picture: Cybersecurity Arms Race 2.0

AI is accelerating cyber capabilities worldwide.

This case signals the beginning of a global AI-powered cyber arms race, where:

- nations leverage AI to attack

- nations leverage AI to defend

- AI models evolve faster than regulations

- the line between commercial and national-security AI blurs

Countries around the world will now face a new question:

How do we allow innovation while preventing AI-fueled conflicts?

This hearing may set the first blueprint.

Conclusion: The Future of AI Security Begins Now

The Anthropic testimony could become a defining moment in technology governance, the first major confrontation between AI misuse and global cybersecurity policy.

AI is now powerful enough to reshape economies, science, and society.

But it is also powerful enough to be misused at unprecedented scale.

This hearing will likely spark:

- new AI laws

- new cybersecurity alliances

- new industry standards

- and global cooperation frameworks

We are witnessing the beginning of a new era:

AI security is no longer optional; it is essential.
