A new wave of transformation is sweeping across the global tech ecosystem. Big-tech cloud and infrastructure providers, especially Amazon Web Services (AWS) and Nvidia, are ramping up investments, partnerships, and product roadmaps built specifically for next-generation AI workloads.

According to industry insights and Reuters reporting, these companies are accelerating development of hardware, chips, networking solutions, and cloud services capable of supporting:

- Larger foundation models (multi-trillion parameters)

- Real-time generative AI and inference

- Massive-scale data processing

- AI-powered automation and edge intelligence

- Complex multimodal workloads (text + video + speech + robotics)

This is not just an upgrade; it is the beginning of a foundational shift in global computing infrastructure. Cloud providers and semiconductor leaders are preparing for an AI-driven digital economy that will require far more compute, storage, bandwidth, and power than ever before.

Why Cloud & Infrastructure Giants Are Investing Aggressively in AI

For companies like AWS, Microsoft, Google Cloud, and Nvidia, AI is no longer an “add-on” to existing services. It has become the core strategy.

There are five major reasons driving this acceleration.

1. AI Workloads Are Growing at an Explosive Pace

Generative AI has dramatically changed how organizations think about computing:

- AI training now requires massive GPU clusters

- AI inference is moving toward real-time response

- New multimodal models process text, images, speech, and video simultaneously

- Enterprises want custom AI models trained on proprietary data

The computational demands of AI are growing far beyond what traditional cloud infrastructure was designed for.

This is why AWS and Nvidia are heavily investing in:

- New GPU architectures

- Faster interconnects

- Larger-scale distributed computing

- AI-optimized storage systems

- High-bandwidth memory solutions

- AI-specific networking fabrics

2. AI Is the Future of Cloud Revenue Growth

Cloud growth is slowing in some enterprise segments, but AI demand is skyrocketing.

According to analysts, the next decade of cloud revenue growth will come from:

- AI training clusters

- AI inference platforms

- Enterprise copilots

- Industry-specific AI services

- Edge-AI deployments in factories, hospitals, retail, and logistics

- Data-driven automation tools

Cloud giants cannot afford to fall behind in this race.

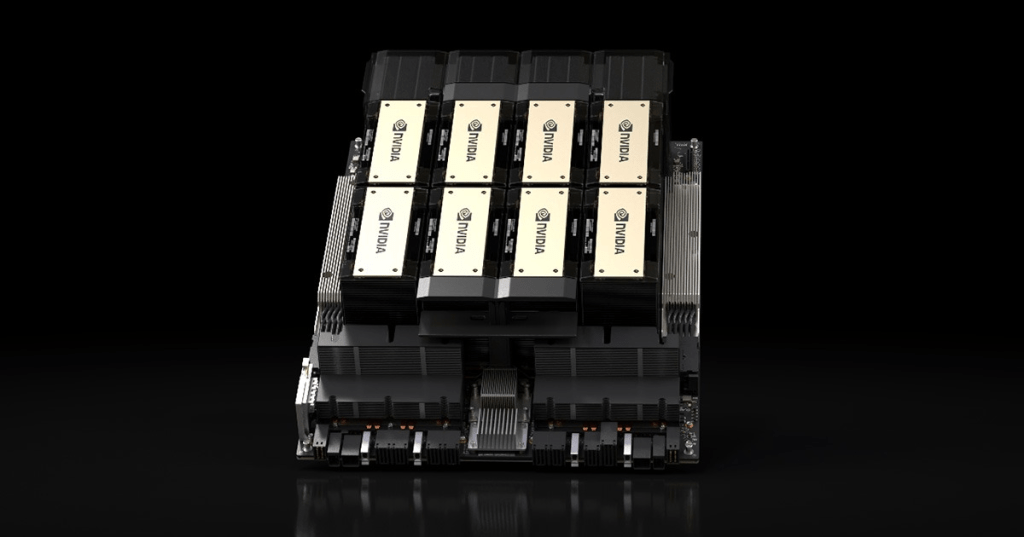

3. Hardware Is Becoming Strategic Again

For years, software was seen as the primary driver of innovation. But AI has brought hardware back to center stage.

Modern AI requires:

- High-density GPUs

- Purpose-built accelerators

- AI-optimized CPUs

- Ultra-fast networking (NVLink, InfiniBand)

- Liquid cooling

- AI-specific memory architectures

This is why Nvidia, the world’s leading AI chip maker, is now deeply tied to cloud providers.

AWS + Nvidia: A Strategic Alliance

AWS is building entire AI server families around Nvidia chips, especially:

- H200 GPUs

- Blackwell architecture

- Grace Hopper Superchips

This allows AWS to position itself as the world’s biggest AI supercloud.

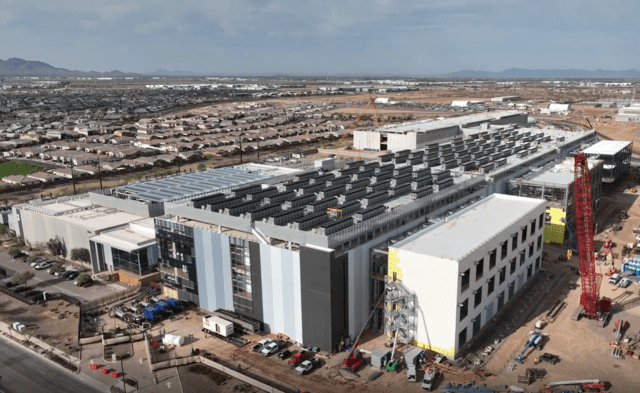

4. AI Infrastructure Is Becoming the New Global Utility

Just as the world once built highways, power grids, and telecom networks, today we are witnessing:

The construction of global AI infrastructure.

This includes:

- AI supercomputers

- Hyperscale GPU clusters

- Renewable-powered data centers

- Fiber-optic connectivity for high-bandwidth AI workloads

- Edge-compute nodes for real-time AI processing

Companies that build this infrastructure will lead the next generation of global technology.

5. Competition Among Big Tech Is Intensifying

The AI race is no longer just about innovation; it’s about market domination.

- Microsoft has multi-billion-dollar AI investments, including OpenAI integrations

- Google Cloud is pushing hard with TPU-based AI services

- AWS is rapidly scaling its AI platform with Trainium, Inferentia, and Nvidia systems

- Nvidia is expanding deeper into cloud partnerships and design tools

- Meta is building its own AI supercomputers and custom silicon (e.g., MTIA accelerators)

This competition forces every company to move faster.

Next-Gen AI Workloads Are Redefining What Infrastructure Must Deliver

AI is not like traditional computing. New workloads require new capabilities.

1. Multi-Trillion-Parameter Models

Next-gen foundation models may reach 5 to 10 trillion parameters. Training them requires:

- Tens of thousands of GPUs

- Fast distributed compute

- Low-latency node-to-node communication

Cloud providers must build entirely new architectures to keep up.
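To get a feel for the scale involved, here is a rough back-of-envelope sketch in Python. It is not a sizing tool. It rests on several assumptions that are not from the reporting above: roughly 16 bytes of training state per parameter (a commonly cited figure for mixed-precision training with an Adam-style optimizer), the common `6 * N * D` approximation for training compute, and hypothetical values for per-GPU memory, per-GPU throughput, and utilization.

```python
import math

# Assumption: mixed-precision training with an Adam-style optimizer keeps
# roughly 16 bytes of state per parameter (weights, gradients, optimizer state).
BYTES_PER_PARAM_TRAINING = 16


def min_gpus_for_model_state(num_params: float, gpu_memory_gb: float) -> int:
    """Lower bound on GPUs needed just to hold model state (activations ignored)."""
    total_bytes = num_params * BYTES_PER_PARAM_TRAINING
    gpu_bytes = gpu_memory_gb * 1e9
    return math.ceil(total_bytes / gpu_bytes)


def training_flops(num_params: float, num_tokens: float) -> float:
    """Rough total training compute using the 6 * N * D rule of thumb."""
    return 6 * num_params * num_tokens


def training_days(total_flops: float, num_gpus: int,
                  flops_per_gpu: float, utilization: float) -> float:
    """Wall-clock days given a sustained per-GPU throughput and utilization."""
    seconds = total_flops / (num_gpus * flops_per_gpu * utilization)
    return seconds / 86400


if __name__ == "__main__":
    params = 5e12   # 5 trillion parameters (low end of the range above)
    tokens = 1e13   # hypothetical training set size: 10 trillion tokens

    # Hypothetical GPU with 141 GB of memory.
    print(min_gpus_for_model_state(params, gpu_memory_gb=141))

    # Hypothetical: 20,000 GPUs, 1e15 FLOP/s each, 40% sustained utilization.
    flops = training_flops(params, tokens)
    print(round(training_days(flops, 20_000, 1e15, 0.4)))
```

Under these assumptions, memory alone calls for hundreds of GPUs, and the compute estimate works out to roughly 434 days even on 20,000 GPUs. That is one way to see why clusters in the tens of thousands of GPUs come up in discussions of models this size.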

2. Real-Time AI & Inference at Scale

Generative AI is moving toward:

- Streaming responses

- Instant multimodal output

- AI agents acting autonomously

- Live data-driven decision-making

This requires:

- GPUs optimized for inference

- Edge-AI servers

- Smaller, deployable models

- Ultra-fast memory
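The link between memory speed, model size, and response time can be illustrated with a simple estimate. The sketch below assumes that generating each token requires reading all model weights from memory once (single stream, batch size 1), which gives an upper bound on decode speed. The bandwidth figure and model sizes are hypothetical values chosen for illustration.

```python
def model_bytes(num_params: float, bytes_per_param: float) -> float:
    """Size of the model weights in bytes."""
    return num_params * bytes_per_param


def max_decode_tokens_per_second(num_params: float,
                                 bytes_per_param: float,
                                 mem_bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed when each generated token
    requires one full read of the weights from memory."""
    return mem_bandwidth_bytes_per_s / model_bytes(num_params, bytes_per_param)


if __name__ == "__main__":
    bandwidth = 4.8e12  # hypothetical accelerator: 4.8 TB/s memory bandwidth

    # Hypothetical 70-billion-parameter model stored in 16-bit precision.
    print(max_decode_tokens_per_second(70e9, 2, bandwidth))

    # Hypothetical 8-billion-parameter model stored in 8-bit precision.
    print(max_decode_tokens_per_second(8e9, 1, bandwidth))
```

With these numbers the larger model tops out at about 34 tokens per second per stream, while the smaller one reaches about 600. Real systems batch requests and use other optimizations, so treat this only as intuition for why smaller models and faster memory both matter for real-time inference.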

3. Massive Data Ingestion & Processing

AI relies on data, and lots of it.

Cloud providers are building:

- AI data pipelines

- High-throughput storage layers

- Data-cleaning and labeling services

- Vector databases and retrieval systems

- AI-native analytics engines

The goal: support real-time, automated, AI-driven insights for every industry.
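As a concrete illustration of what a vector database and retrieval system does at its core, here is a minimal sketch of similarity search over embeddings. It uses brute-force cosine similarity over a tiny in-memory index. The document IDs and vectors are made up for the example, and production systems typically rely on approximate nearest-neighbor indexes rather than scanning every vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=2):
    """Return the IDs of the k stored vectors most similar to the query."""
    scored = [(cosine_similarity(query, vec), doc_id)
              for doc_id, vec in index.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [doc_id for _, doc_id in scored[:k]]


# Hypothetical 3-dimensional embeddings; real embeddings have hundreds
# or thousands of dimensions and come from an embedding model.
INDEX = {
    "gpu-cluster-guide": [0.9, 0.1, 0.0],
    "cooling-overview": [0.1, 0.9, 0.1],
    "storage-tiers": [0.0, 0.2, 0.9],
}

if __name__ == "__main__":
    print(top_k([1.0, 0.0, 0.0], INDEX, k=2))
```

The query vector points mostly along the first dimension, so the GPU cluster document ranks first and the cooling document second.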

How AWS and Nvidia Are Building the Future of AI Infrastructure

AWS is becoming the “AI factory” for the world

AWS is investing in:

- Global GPU superclusters

- Custom AI chips (Trainium2, Inferentia2)

- Nvidia-powered AI servers

- AI-centric data-center expansions

- AI cloud services in Amazon SageMaker

- Fully managed inference endpoints

Nvidia is supplying the world’s AI engines

Nvidia’s roadmap is focused on:

- Blackwell GPUs

- Grace Hopper superchips

- Enterprise AI frameworks

- AI networking (NVLink, InfiniBand)

- AI-accelerated design tools

- Cloud-native GPU virtualization

Nvidia isn’t just selling chips; it’s building the global AI engine room.

What This Means for the Future of Cloud Computing

We are entering the AI-native cloud era, defined by:

1. Cloud architecture built around AI as the default

Not supplemental, but primary.

2. Hardware-software co-design becoming essential

AI requires deep integration between silicon and software frameworks.

3. Massive growth in AI-driven cloud spending

Analysts expect trillions of dollars in AI-related infrastructure investments over the next decade.

4. Entire industries shifting workloads to AI-powered cloud platforms

Including:

- Healthcare

- Automotive

- Manufacturing

- Commerce

- Security

- Media

5. A new global hierarchy of tech companies

Those who lead in AI infrastructure will dominate the technological landscape.

The Next Era of Computing Has Already Begun

The aggressive push by AWS, Nvidia, and other cloud giants signals a major technological turning point.

We are shifting from:

Traditional cloud computing

to

AI-first, hardware-intensive, globally distributed supercomputing

This new era will shape:

- How companies build products

- How nations compete

- How data moves

- How AI models evolve

- How the global economy operates

One thing is clear:

Cloud and AI infrastructure providers are not just preparing for the future; they are building it.
