In a move that underscores the accelerating arms race in artificial intelligence infrastructure, Amazon Web Services (AWS) has announced the rollout of its next-generation AI servers, built using Nvidia’s advanced technologies. According to Reuters, the initiative reflects Amazon’s commitment to powering ever-larger AI models, increasing data center efficiency, and enhancing cloud performance for global enterprises.

This development is more than just a hardware upgrade; it’s a strategic realignment of AWS’s compute ecosystem to meet the explosive demand for AI training, inference, and high-performance analytics. As organizations adopt generative AI at unprecedented speed, cloud providers are under pressure to deliver infrastructure that is faster, more scalable, and more cost-efficient. AWS’s partnership with Nvidia aims to meet exactly that need.

The Rise of AI-Driven Cloud Infrastructure

Over the past few years, AI workloads have shifted dramatically from experimental projects to mission-critical operations. Startups, Fortune 500 companies, government agencies, and research institutions are all deploying foundation models, LLMs, multimodal pipelines, and predictive AI systems.

The result?

A massive surge in demand for specialized AI compute.

Traditional servers are no longer capable of handling the scale required for:

- Training trillion-parameter models

- Rapid real-time inference

- Simulation-heavy tasks

- Autonomous systems

- Generative and multimodal AI workloads

AWS recognized this shift early, introducing custom chips like Trainium and Inferentia. But with Nvidia continuing to dominate high-end AI compute, AWS is now aligning its infrastructure more tightly with the world’s most widely adopted AI hardware ecosystem.

Nvidia Technologies Powering the New AWS AI Servers

While AWS has not disclosed every technical detail, the new AI server fleet is expected to integrate core Nvidia technologies such as:

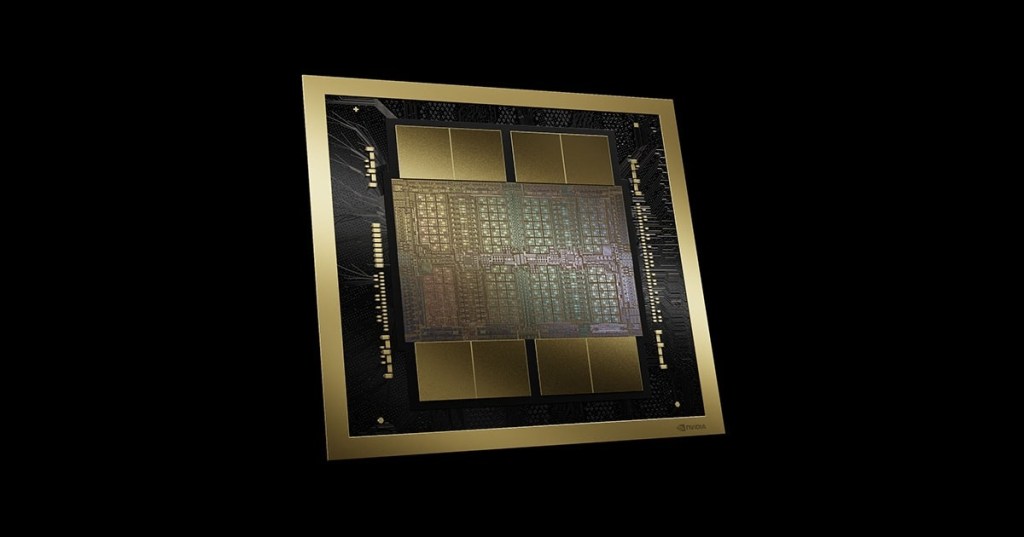

1. The Latest Nvidia GPUs (H200, Blackwell architecture, or next-gen accelerators)

These GPUs offer massive improvements in:

- Tensor processing throughput

- Memory bandwidth

- Energy efficiency

- Multi-GPU scaling

They enable faster training of generative AI models, computer vision systems, and scientific simulations.

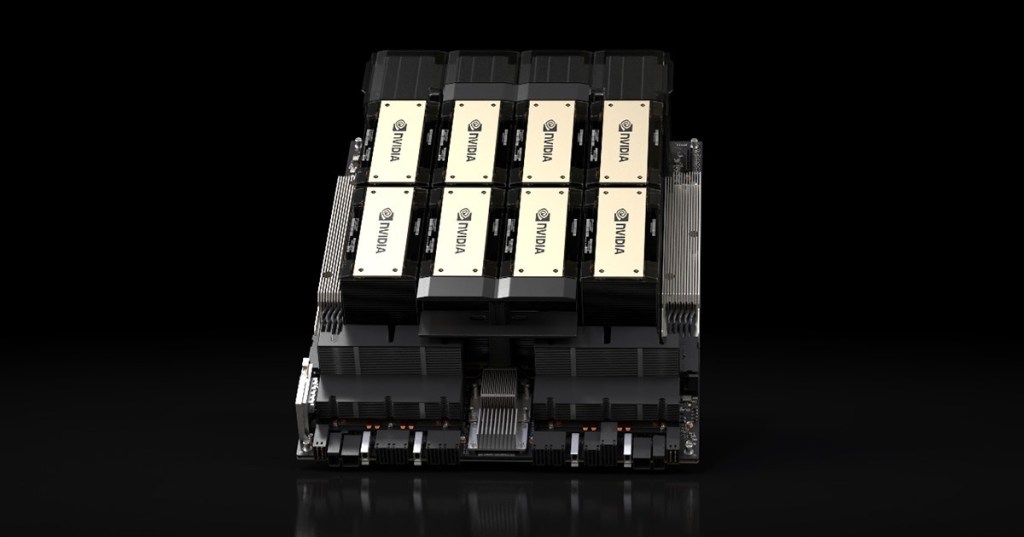

2. Nvidia NVLink & Networking Technologies

AWS data centers will gain the ability to connect thousands of GPUs over low-latency, high-speed interconnects. This is especially crucial for:

- Distributed training

- Multi-node inference

- Model-parallel workflows
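To see why interconnect speed matters for distributed training, here is a minimal sketch of the gradient-averaging step used in data-parallel training. It is a pure-Python simulation, not AWS or Nvidia code: the function names `all_reduce_mean` and `sgd_step` and all the numbers are hypothetical, chosen only for illustration.

```python
from typing import List


def all_reduce_mean(worker_grads: List[List[float]]) -> List[float]:
    """Average gradients element-wise across workers.

    This is the result an all-reduce produces in data-parallel training;
    here it is computed in one process instead of across real GPUs.
    """
    n_workers = len(worker_grads)
    n_params = len(worker_grads[0])
    return [
        sum(grads[i] for grads in worker_grads) / n_workers
        for i in range(n_params)
    ]


def sgd_step(params: List[float], grad: List[float], lr: float) -> List[float]:
    """Apply one plain gradient-descent update."""
    return [p - lr * g for p, g in zip(params, grad)]


# Hypothetical gradients from 4 simulated workers, 2 parameters each.
worker_grads = [
    [1.0, 2.0],
    [3.0, 4.0],
    [5.0, 6.0],
    [7.0, 8.0],
]

avg = all_reduce_mean(worker_grads)            # [4.0, 5.0]
params = sgd_step([10.0, 10.0], avg, lr=0.5)   # [8.0, 7.5]
print(avg, params)
```

In a real cluster, every training step exchanges a gradient roughly the size of the model among all workers, so the time spent in this averaging step depends directly on link bandwidth and latency.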

3. Nvidia AI Enterprise Software Stack

This includes:

- CUDA

- TensorRT

- cuDNN

- NeMo for LLMs

- Nvidia Inference Microservices (NIM)

By offering these tools natively, AWS lets developers get production-ready AI pipelines running faster.

Why AWS Is Making This Move Now

The timing of Amazon’s expanded Nvidia partnership is no coincidence.

1. AI Demand Is Outpacing Data Center Capacity

Cloud providers worldwide are scrambling to scale up.

AWS cannot afford bottlenecks, especially with Microsoft and Google aggressively investing in their own AI cloud stacks.

2. Enterprise AI Adoption Is Exploding

Banks, hospitals, logistics networks, and entertainment companies are deploying:

- AI copilots

- Automated customer service

- Predictive analytics

- Computer-generated content

These require immense compute power.

3. Reinforcing AWS’s Leadership in Cloud Computing

AWS still holds the largest share of the cloud market, but competition is rising fast. Offering some of the fastest AI servers available helps Amazon differentiate itself and retain AI-heavy customers.

How These New AI Servers Transform the Cloud Experience

1. Faster Model Training for Enterprises

Businesses that once needed weeks to train a model may now be able to finish in days or even hours. This reduces:

- Development costs

- Time-to-market

- Infrastructure waste

2. Better Performance for Generative AI

With Nvidia’s top-tier GPUs behind the scenes, AWS users will experience:

- Faster text generation

- More accurate image models

- Smoother deployment of large-scale LLMs

3. Cost Optimization at Scale

Amazon aims to reduce the cost per compute unit for AI tasks. This allows startups and large enterprises alike to build ambitious AI systems without exceeding budget constraints.
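The arithmetic behind this claim can be sketched in a few lines. This is an illustration only: the function `training_cost`, the GPU counts, hours, and hourly rates are all hypothetical placeholders, not AWS pricing or benchmark results.

```python
def training_cost(gpu_count: int, hours: float, hourly_rate_per_gpu: float) -> float:
    """Total cost of a training run: GPUs x wall-clock hours x price per GPU-hour."""
    return gpu_count * hours * hourly_rate_per_gpu


# Hypothetical scenario: the newer instance costs more per GPU-hour
# but finishes the same job in fewer hours.
older = training_cost(gpu_count=8, hours=100.0, hourly_rate_per_gpu=4.0)
newer = training_cost(gpu_count=8, hours=40.0, hourly_rate_per_gpu=6.0)

savings_fraction = (older - newer) / older

print(f"older: {older:.2f}, newer: {newer:.2f}, savings: {savings_fraction:.0%}")
```

Under these made-up numbers, the pricier hardware still lowers the total bill because the speedup outweighs the higher hourly rate. Whether that holds for a given workload depends on actual pricing and measured training time.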

4. Seamless Integration with AWS Services

The new AI servers will plug into:

- Amazon SageMaker

- Amazon EC2 GPU instances

- Managed inference endpoints

- AI-specific developer tools

This means minimal friction for developers migrating workloads.

A Strategic Partnership with Long-Term Implications

Amazon and Nvidia have a long history of collaboration, but this rollout marks a deeper level of commitment. Here’s why it matters:

1. The Cloud Wars Are Now AI Wars

Every cloud provider is competing to host the next OpenAI, Anthropic, or groundbreaking enterprise AI model. Having the highest-performing GPU clusters is a major competitive edge.

2. Accelerated Global AI Development

AWS operates the world’s largest cloud infrastructure footprint. Integrating Nvidia AI systems allows AI development at global scale, benefiting:

- Research labs

- Startups

- Industries undergoing digital transformation

3. Strengthening the AI Supply Chain

As chip shortages persist and demand rises, Amazon’s alignment with Nvidia is meant to help secure:

- Access to next-gen chips

- Priority allocation for data center deployments

- Stable, long-term growth in AI compute availability

The Future of AWS and Nvidia in AI

Looking ahead, several possibilities emerge:

1. Hyperscale AI Clusters for Public Use

AWS could build massive, multi-thousand-GPU clusters for rent, ideal for training frontier models.

2. AI Cloud-as-a-Service

Enterprises may soon access turnkey AI systems optimized end-to-end by AWS and Nvidia.

3. Custom Silicon + Nvidia Hybrid Architectures

AWS may combine Trainium with Nvidia GPUs in hybrid clusters, offering a best-of-both-worlds solution.

4. More Regions Getting AI Supercompute

Expect AWS regions in the US, Europe, India, and the wider APAC area to receive AI-focused server upgrades.

Conclusion: A New Era of AI Infrastructure Begins

Amazon’s rollout of Nvidia-powered AI servers isn’t just a technical upgrade; it’s a strategic evolution of the global AI ecosystem. With demand for generative AI and machine learning accelerating, hyperscalers must reinvent the physical backbone of the internet.

By partnering with Nvidia at scale, AWS is positioning itself to power the next generation of AI breakthroughs across every industry.

The future of AI will be shaped by companies with the strongest infrastructure, and Amazon just signaled that it intends to be one of them.
