Artificial intelligence isn’t just transforming software; it’s reshaping the physical backbone of the digital world. Data centers, cloud systems, and global infrastructure are undergoing their biggest evolution in decades.

According to new industry insights reported by DQ, demand for AI-optimized data centers is skyrocketing. As companies fight for access to high-performance AI chips, they are investing heavily in advanced cooling, energy-efficient server farms, and next-generation hardware designed specifically for massive AI workloads.

At the same time, a major architectural shift is happening: hybrid cloud + edge computing is becoming the default strategy for enterprises. As Mindsight highlights, companies now need both centralized cloud power and distributed edge performance to keep up with modern real-time applications, from IoT to automation to analytics.

We’re entering an era where digital infrastructure matters more than ever.

AI Is Reshaping Data Centers Worldwide

AI models continue to grow in size, complexity, and compute demands. Whether it’s training foundation models or running fast inference at scale, organizations need systems that can handle petabytes of data and extreme power consumption.

This surge has created three major shifts:

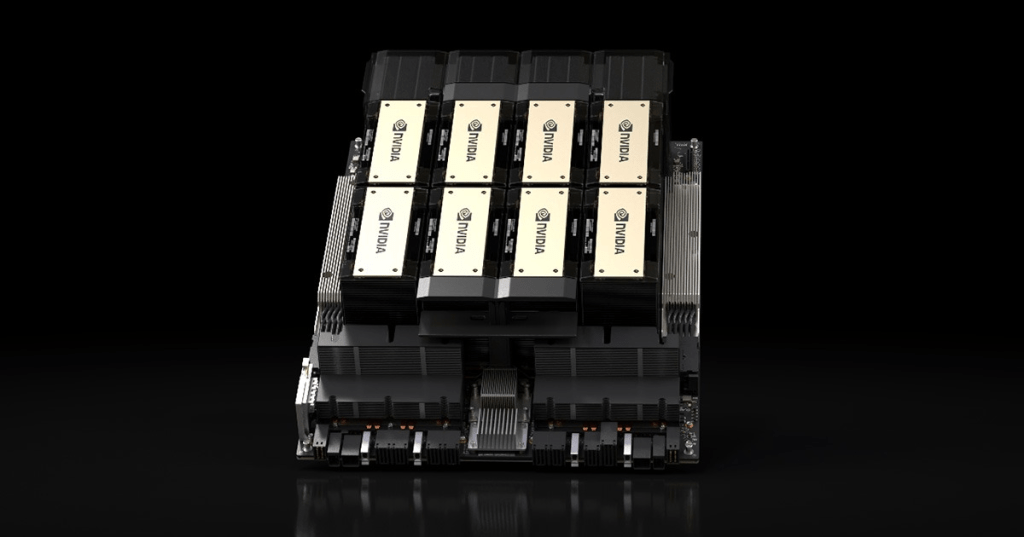

1. Competition for AI Chips Is Intensifying

AI chips, from NVIDIA GPUs to custom accelerators, are now among the most valuable resources in tech.

- Demand far exceeds supply

- Companies reserve chips months in advance

- Cloud providers are building custom AI hardware

- Nations are investing in semiconductor plants

Businesses that lack access to compute risk falling behind in AI capability.

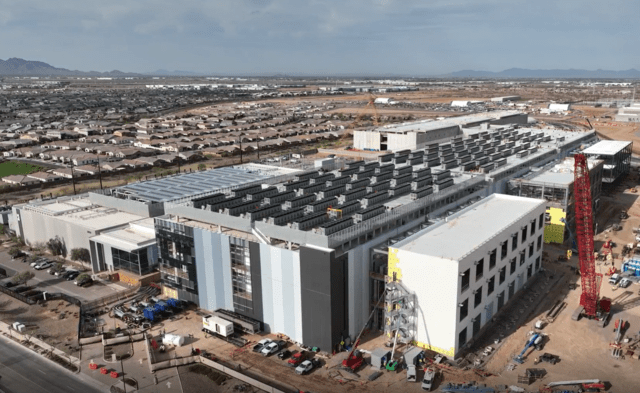

2. The Rise of AI-Optimized Data Centers

Traditional data centers weren’t built for today’s intense AI workloads. To handle large-scale model training and inference, companies are:

- Increasing rack density

- Deploying GPU clusters

- Adding high-bandwidth networking

- Upgrading power delivery and cooling systems

The result? A new category: AI-first data centers.

Why this shift matters

AI workloads generate far more heat and power demand than typical cloud computing tasks. This requires redesigned infrastructure that can support:

- Massive parallel compute

- High-speed interconnects

- Low latency and high throughput

- Efficient cooling at scale

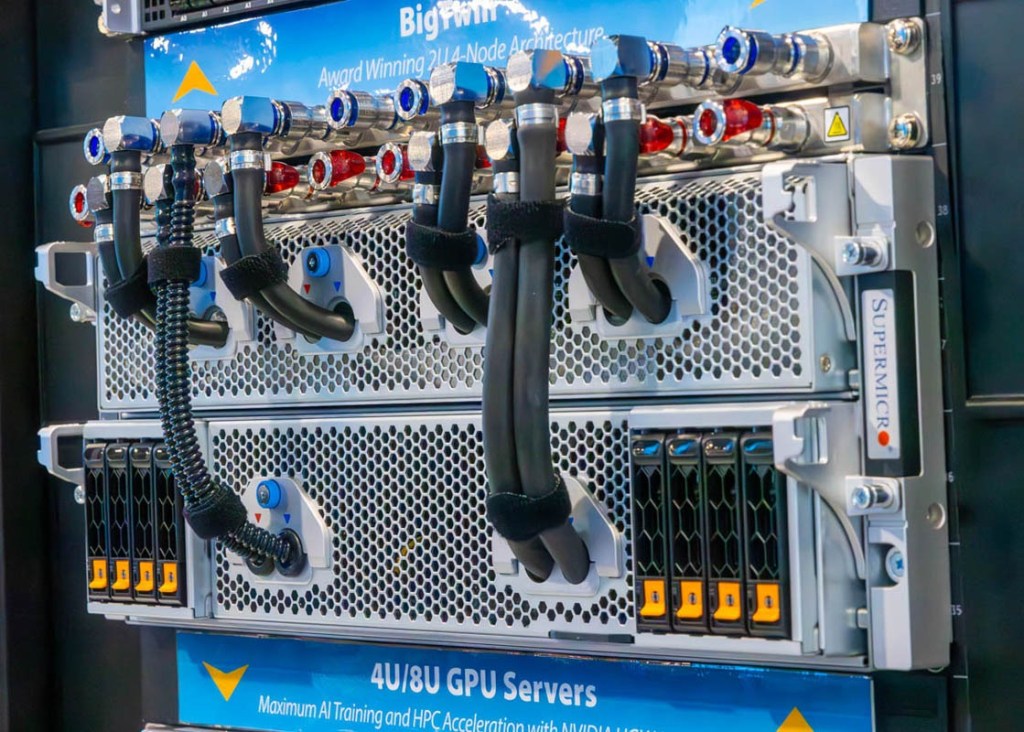

3. Liquid Cooling and Advanced Thermal Solutions

As heat output skyrockets, companies are replacing old air-cooling systems with:

- Liquid cooling loops

- Immersion cooling tanks

- Direct-to-chip cooling solutions

- Energy-efficient thermal designs

These methods can manage the extreme thermal loads generated by high-density GPU clusters.

Liquid cooling isn’t just an upgrade; it’s becoming a necessity for next-gen data centers.
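To make the scale of the problem concrete, here is a rough back-of-the-envelope sketch in Python. It assumes that essentially all electrical power drawn by a rack ends up as heat, and uses the standard relation heat = mass flow × specific heat × temperature rise to estimate how much water a cooling loop would need to move. The accelerator count, per-device wattage, overhead fraction, and temperature rise are all hypothetical values chosen for illustration, not figures from any vendor or from the reports cited here.

```python
# Illustrative only: every input below is a hypothetical assumption,
# not a figure from the article or from any hardware vendor.

WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate, near room temperature


def rack_heat_load_watts(accelerators: int, watts_each: float,
                         overhead_fraction: float) -> float:
    """Estimate rack heat load, assuming all electrical power becomes heat."""
    return accelerators * watts_each * (1.0 + overhead_fraction)


def coolant_flow_l_per_min(heat_watts: float, delta_t_kelvin: float) -> float:
    """Water flow needed to carry away heat_watts at a given coolant temperature rise."""
    if delta_t_kelvin <= 0:
        raise ValueError("delta_t_kelvin must be positive")
    kg_per_second = heat_watts / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_kelvin)
    return kg_per_second / WATER_DENSITY_KG_PER_L * 60.0


if __name__ == "__main__":
    # Hypothetical rack: 32 accelerators at 700 W each, plus 25% for CPUs,
    # networking, and power conversion losses.
    heat = rack_heat_load_watts(accelerators=32, watts_each=700.0,
                                overhead_fraction=0.25)
    flow = coolant_flow_l_per_min(heat, delta_t_kelvin=10.0)
    print(f"Heat load: {heat / 1000:.1f} kW, water flow: {flow:.1f} L/min")
```

Under these assumed inputs, a single rack needs tens of liters of water per minute, which hints at why dense GPU deployments push operators away from air cooling.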

Hybrid Cloud + Edge Computing: The New Enterprise Standard

While massive cloud systems remain the backbone of AI, businesses increasingly need real-time processing, which the cloud alone often cannot provide because every request must travel to a distant data center and back.

This is why hybrid cloud + edge has emerged as the go-to model.
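The latency limit is partly a matter of physics. The short Python sketch below estimates the minimum round-trip time imposed by propagation delay alone, assuming light travels through optical fiber at roughly 200 km per millisecond (about two thirds of its speed in a vacuum). The distances are hypothetical, and real round trips are longer once routing, queuing, and processing are added.

```python
# Approximate speed of light in optical fiber: about 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2.0 * distance_km / FIBER_KM_PER_MS


# Hypothetical distances, chosen only to illustrate the spread.
for label, km in [
    ("on-site edge node", 0.1),
    ("regional cloud", 500.0),
    ("distant cloud region", 4000.0),
]:
    print(f"{label}: at least {round_trip_ms(km):.3f} ms round trip")
```

Even in this idealized model, a distant cloud region costs tens of milliseconds per round trip before any computation happens, while a nearby edge node costs almost nothing.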

Why Hybrid Cloud?

Hybrid cloud combines:

- Public cloud: scalable compute, storage, AI tools

- Private cloud: control, compliance, security

- On-prem systems: specialized workloads

This gives companies flexibility while managing data governance.

Why Edge Computing?

Edge computing means processing data close to where it’s generated, such as:

- IoT devices

- Sensors

- Vehicles

- Industrial machines

- Retail devices

- Smart-city networks

Key benefits:

- Ultra-low latency

- Faster decision-making

- Reduced cloud traffic

- Real-time analytics

- Higher reliability

Edge + Cloud Together: The Optimal Architecture

For applications that demand real-time responsiveness, such as:

- Autonomous vehicles

- Industrial automation

- Smart manufacturing

- Healthcare monitoring

- Robotics

- AR/VR systems

- Smart agriculture

…companies combine edge processing with cloud capability.

This blended ecosystem allows:

- Instant local decisions

- Heavy cloud AI processing

- Continuous synchronization

- Scalable data flow

Hybrid + edge is no longer optional. It’s the default architecture for modern enterprises.
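As a minimal sketch of how this split can look in practice, the Python example below models an edge node that makes an immediate local decision on each sensor reading and batches the readings for later upload to the cloud. The `EdgeNode` and `Reading` classes, the temperature threshold, and the batch size are all hypothetical names and values invented for illustration; the `sync` method is a stand-in for a real cloud upload, not any provider's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Reading:
    sensor_id: str
    temperature_c: float


@dataclass
class EdgeNode:
    """Hypothetical edge node: decides locally, batches data for the cloud."""
    shutdown_threshold_c: float = 90.0  # assumed safety limit
    batch_size: int = 3                 # assumed upload batch size
    pending: List[Reading] = field(default_factory=list)
    uploaded_batches: List[List[Reading]] = field(default_factory=list)

    def handle(self, reading: Reading) -> str:
        # Instant local decision: no network round trip involved.
        if reading.temperature_c >= self.shutdown_threshold_c:
            action = "shutdown"
        else:
            action = "ok"
        # Queue the reading for heavier cloud-side analysis.
        self.pending.append(reading)
        if len(self.pending) >= self.batch_size:
            self.sync()
        return action

    def sync(self) -> None:
        # Stand-in for a real upload; a production system would send
        # the batch to a cloud service here.
        if self.pending:
            self.uploaded_batches.append(self.pending)
            self.pending = []


node = EdgeNode()
actions = [node.handle(Reading("press-1", t)) for t in (70.0, 95.0, 72.0, 71.0)]
print(actions)  # ['ok', 'shutdown', 'ok', 'ok']
print(len(node.uploaded_batches), len(node.pending))  # 1 1
```

The design choice to note is that the safety decision never waits on the network, while the cloud still receives every reading in batches for training or long-term analytics.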

Global Infrastructure Investments Are Surging

To support AI, cloud, and edge workloads, governments and major companies are investing billions into next-gen digital infrastructure:

- New hyperscale data centers

- Semiconductor manufacturing plants

- Low-latency fiber networks

- Renewable energy for data center power

- Edge computing hubs

- AI-specific cloud clusters

Tech giants like Google, Microsoft, AWS, Meta, Oracle, Tencent, and Alibaba are racing to expand global capacity.

Countries are also treating digital infrastructure as a strategic asset, similar to energy grids, roads, and industrial networks.

The Future: A Distributed, AI-Optimized Digital World

We are living through a massive transformation in how digital infrastructure is built and scaled.

The next decade will be defined by:

- AI-first data centers

- Liquid cooling as the norm

- Explosive chip demand

- Hybrid cloud ecosystems

- Distributed edge networks

- Real-time AI workloads

- Global infrastructure races

AI isn’t just revolutionizing software; it’s forcing the entire world to redesign the physical foundation of the internet.

The companies that adapt the fastest will lead the next generation of digital innovation.
