AWS has long been a leader in cloud computing, offering flexible, powerful infrastructure to individuals, startups, and large enterprises. NVIDIA, meanwhile, has been a powerhouse in AI hardware (GPUs and specialized AI chips) and software tools. Recently, the two companies have significantly deepened their collaboration, signaling a major shift in how the global AI backbone is being built and deployed.

At the heart of this collaboration is a move by AWS to integrate NVIDIA’s advanced AI technology directly into its infrastructure. The result: forthcoming AWS AI servers that will combine AWS’s cloud scale, networking, storage, and orchestration with NVIDIA’s high-end AI hardware and software stack.

What does this mean? For developers, enterprises, and governments, it means faster, more efficient, and more scalable AI compute, accessible via the cloud or through on-premises deployments. For AI models, it means shorter training times, better efficiency, and the capacity to handle larger, more complex workloads.

What’s New: Trainium, Chips, Factories & Fusion

1. AI Factories: On-Prem & Cloud Flexibility

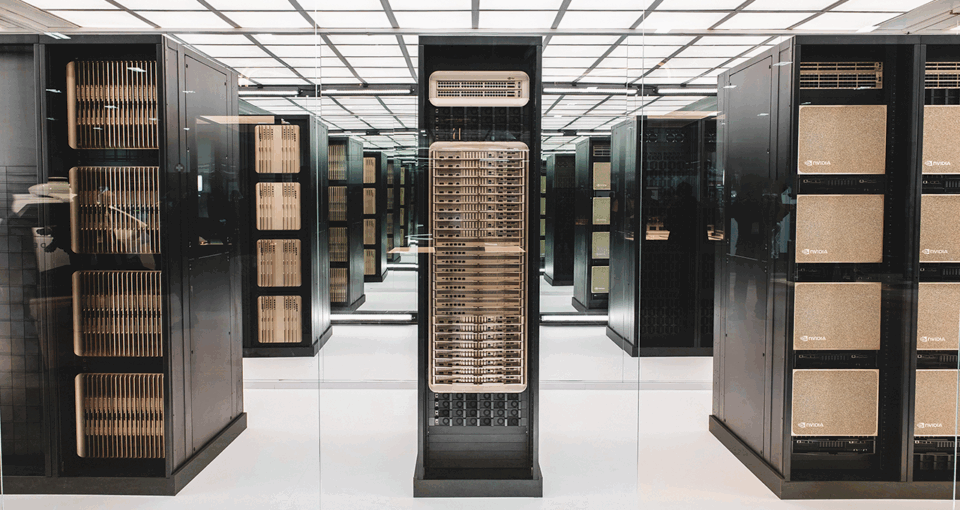

AWS is launching what it calls “AI Factories”: full-stack AI deployments (hardware, software, and management) built in collaboration with NVIDIA. For clients such as enterprises, governments, and large organizations that need control over their data (e.g., for privacy, sovereignty, or regulatory reasons), this means they can host NVIDIA-powered AI infrastructure in their own datacenters while AWS manages it.

Essentially, you get supercomputer-grade AI infrastructure without having to build and maintain it yourself, which makes it well suited to companies working with highly sensitive data or operating under strict compliance regimes.

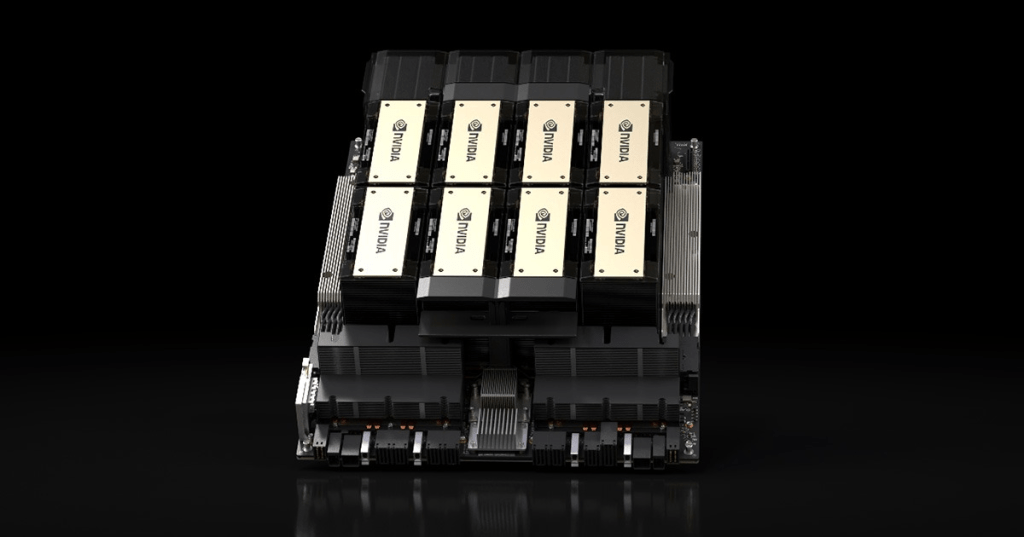

2. Next-Gen Chips + NVLink Fusion Interconnect

AWS has announced that its future custom AI chips (e.g., the upcoming Trainium4) will adopt NVIDIA NVLink Fusion, a high-speed interconnect technology that tightly links multiple chips and GPUs, enabling high-bandwidth communication and efficient scaling for large AI tasks.

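This matters because training large AI models often requires many chips working in sync, exchanging data at every step, so the speed of the links between them can limit overall performance. With NVLink Fusion and optimized infrastructure, AWS and NVIDIA together aim to deliver performance and efficiency that has typically been reserved for dedicated AI labs or supercomputers.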
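To see why link speed matters, consider data-parallel training, where devices average their gradients after every step. The sketch below is a rough, hypothetical estimate using the standard bandwidth term of a ring all-reduce, in which each of N devices sends about 2(N - 1)/N times the gradient size over its link; it ignores latency and any overlap with compute. The function name, the 10 GB gradient size, and both link speeds are illustrative assumptions, not published NVLink Fusion or Trainium figures.

```python
def ring_allreduce_seconds(payload_bytes: float,
                           num_devices: int,
                           link_bytes_per_s: float) -> float:
    """Bandwidth-only cost of a ring all-reduce.

    Each device sends (and receives) 2 * (N - 1) / N * payload bytes over its
    link. Latency and compute/communication overlap are ignored.
    """
    if num_devices < 2:
        return 0.0  # nothing to synchronize with a single device
    bytes_per_device = 2 * (num_devices - 1) / num_devices * payload_bytes
    return bytes_per_device / link_bytes_per_s


if __name__ == "__main__":
    GB = 1e9
    gradients = 10 * GB  # hypothetical: 10 GB of gradients synchronized per step
    # Hypothetical per-device link bandwidths, chosen only for comparison;
    # they are not published NVLink Fusion or Trainium figures.
    for label, bw in [("slower link, 50 GB/s", 50 * GB),
                      ("faster link, 500 GB/s", 500 * GB)]:
        t = ring_allreduce_seconds(gradients, num_devices=8, link_bytes_per_s=bw)
        print(f"{label}: {t:.3f} s per synchronization")
```

With these assumed numbers and 8 devices, the tenfold faster link cuts each synchronization from about 0.35 s to about 0.035 s, a saving that repeats on every training step.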

3. Broad Support for GPU-Accelerated Cloud Instances

The collaboration also reinforces AWS’s existing GPU-powered instance offerings: EC2 instances with various NVIDIA GPUs, suited to workloads such as training, inference, graphics, and HPC, are widely used today, and this partnership strengthens that base.

This also signals that AWS sees GPU-accelerated AI as a core pillar of its cloud strategy, and with NVIDIA’s latest technology built in, that pillar is getting stronger.

4. End-to-End AI Stack: Hardware, Software, Services

Beyond hardware, the AWS–NVIDIA tie-up aims to co-engineer the full AI stack (compute, interconnect, storage, orchestration, and model-training frameworks) and deliver it as a turnkey solution to enterprises. This reduces complexity for organizations that want to deploy AI at scale but lack deep hardware or infrastructure expertise.

In practice, this lowers the barrier for companies to start large-scale AI adoption: less setup friction, more reliability, and faster time-to-value.

Why This Matters For AI, Business & the Global Cloud Landscape

1. Democratizing High-End AI Infrastructure

Traditionally, training very large AI models or running heavy workloads demanded vast capital for racks of GPUs, skilled engineers, cooling, networking, and more. With the AWS–NVIDIA collaboration, even smaller companies or organizations in emerging markets (such as India) could access cutting-edge AI infrastructure on demand, without major upfront investment.
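The rent-versus-buy trade-off can be made concrete with a simple break-even estimate: buying pays off only once the hourly savings over renting have covered the upfront cost. This is a minimal sketch under stated assumptions; the function name and all prices are hypothetical placeholders, not AWS or NVIDIA pricing, and it ignores depreciation, financing, and utilization beyond a flat hourly running cost.

```python
def break_even_hours(upfront_cost: float,
                     owned_cost_per_hour: float,
                     cloud_cost_per_hour: float) -> float:
    """Hours of use after which owning hardware becomes cheaper than renting it.

    upfront_cost is the purchase price; owned_cost_per_hour covers running
    costs of owned hardware (power, cooling, operations). Returns infinity
    when renting is never more expensive per hour than owning.
    """
    hourly_savings = cloud_cost_per_hour - owned_cost_per_hour
    if hourly_savings <= 0:
        return float("inf")
    return upfront_cost / hourly_savings


if __name__ == "__main__":
    # Hypothetical placeholder numbers, not real AWS or NVIDIA pricing.
    hours = break_even_hours(upfront_cost=300_000,
                             owned_cost_per_hour=10.0,
                             cloud_cost_per_hour=40.0)
    print(f"Break-even after {hours:,.0f} hours (~{hours / 24:,.0f} days of continuous use)")
```

With these made-up inputs, owning only pays off after about 10,000 hours (roughly 417 days) of continuous use, which is one reason on-demand access can suit teams whose workloads are bursty or still experimental.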

This democratization could accelerate AI adoption globally, with more startups, developers, and institutions tapping into powerful compute. It could fuel innovation and make advanced AI tools accessible beyond wealthy tech hubs.

2. Enabling Enterprise-Grade AI Deployments (Scale + Compliance)

Enterprises needing high performance, whether for large language models (LLMs), generative AI, data analytics, simulation, or HPC workloads, will benefit. And for sectors with strict compliance, data-sovereignty, and security needs (e.g. healthcare, finance, government), AWS’s AI Factories provide a compelling option: local infrastructure, managed operations, and full-stack AI services, while preserving control over data.

This could accelerate enterprise AI adoption globally: not just in the U.S. and other developed countries, but also in regions currently held back by infrastructure or compliance barriers.

3. Driving Next-Generation AI Applications Faster

With improved infrastructure, companies and researchers can build bigger, more ambitious AI models: more powerful LLMs, more sophisticated generative AI, advanced multimodal models (text, image, video, audio), real-time AI services, and high-performance inference.

This means faster innovation cycles, potentially leading to breakthroughs in natural language processing, computer vision, robotics, scientific computing, and deployment of products that today seem futuristic.

4. Strategic Shift: Cloud Providers as AI-Infrastructure Providers

This deep tie-up underscores a fundamental shift: cloud providers aren’t just hosting servers anymore; they’re becoming AI infrastructure platforms. By combining hardware, software, managed services, and global availability, AWS and NVIDIA could offer something like “supercomputer as a service”, accessible worldwide on demand.

That could disrupt traditional datacenter providers, change how companies procure compute, and centralize much of the world’s AI infrastructure under major cloud platforms.

What to Watch Out For: Challenges & Risks

- Cost & Access Inequality: Even though cloud AI is more accessible than building hardware in-house, it is not cheap. Heavy AI workloads can be expensive, potentially limiting access to deep-pocketed companies or well-funded startups.

- Dependence on Cloud Providers: Organizations may become reliant on mega-cloud providers (with lock-in risk, pricing changes, data-governance constraints).

- Centralization vs. Decentralization Trade-off: Placing AI infrastructure under large providers concentrates power, potentially reducing diversity in who owns AI infrastructure and raising concerns about control.

- Regulatory & Data-Sovereignty Considerations: In some regions, regulatory/compliance requirements or data-sovereignty laws may restrict cloud-based AI deployment despite the “on-premises” option.

What This Means for You: Developer, Business, or Observer

If you are a startup founder or developer, this opens up powerful opportunities: you can prototype, train, and deploy AI models on world-class infrastructure without big upfront capital.

If you run a business or enterprise, especially in regulated domains (healthcare, finance, government), AWS + NVIDIA’s AI infrastructure gives you a path to adopt AI at scale, with performance, security, and compliance, without building everything from scratch.

If you are an AI researcher or enthusiast, this intensifies the global AI arms race: more compute and capacity mean more ambitious AI experiments, faster prototyping, and potentially more breakthroughs.

What the New Infrastructure Looks Like

- Massive datacenter racks filled with GPUs and specialized AI chips built for high-throughput computing.

- “AI Factories”: on-premises or cloud-hosted setups combining compute, storage, networking, and an AI software stack.

- Cloud dashboards and orchestration tools managing distributed GPU clusters with simplified deployment pipelines.

These setups represent a leap beyond traditional cloud virtual machines; they form the backbone for next-generation AI workloads worldwide.

What’s Next: Predicted Trends

- More cloud providers will likely follow suit, offering end-to-end AI infrastructure bundled with management, compliance, and global availability.

- Growing adoption of AI across non-tech industries (healthcare, manufacturing, logistics, government, education), aided by easier access to powerful infrastructure.

- Emergence of global AI supply chains that bundle cloud, GPUs, regulatory compliance, and data governance into combined hardware and software services.

- Increased competition, innovation, and specialization in AI infrastructure, e.g., more custom chips, optimized stacks, and hybrid cloud + on-premises deployments.

The deepening alliance between AWS and NVIDIA marks a watershed moment for the AI-cloud world. By combining AWS’s global cloud scale, orchestration, and footprint with NVIDIA’s AI-optimized hardware and software, we are entering an era in which powerful AI infrastructure is no longer the domain of a few elite labs but is becoming widely accessible.

This change has the potential to democratize AI, accelerate enterprise adoption, enable ambitious AI projects worldwide, and reshape the global technology landscape.
