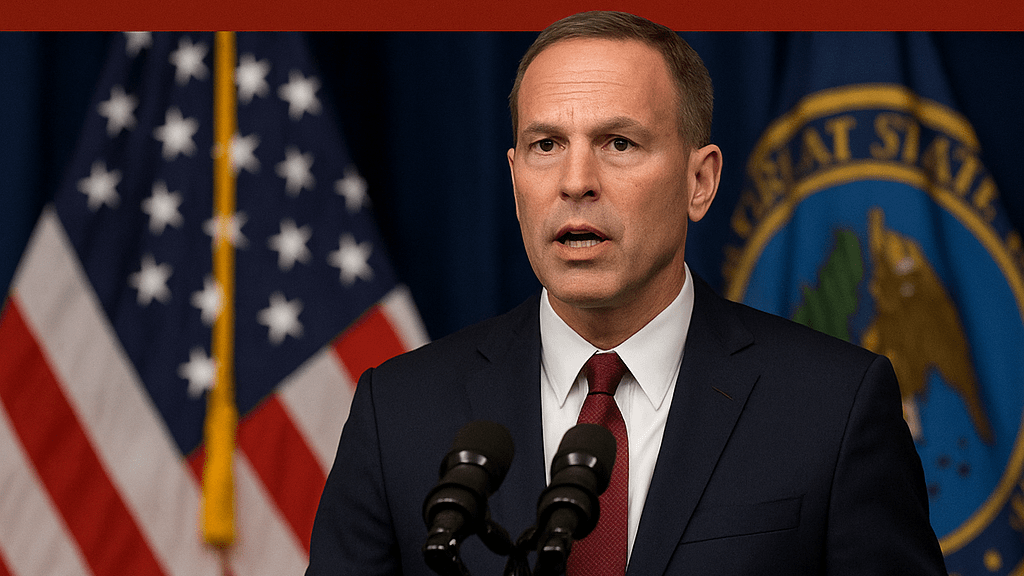

The AI gold rush just hit a major speed bump, and it’s coming straight from top state legal officials across the United States. A coalition of 42 state attorneys general has officially warned AI giants including Microsoft, Google, OpenAI, and Meta that their chatbots are producing “delusional” or harmful outputs, and that these outputs could pose real mental-health risks to users.

This isn’t just another political soundbite. It’s one of the strongest, broadest regulatory signals the AI industry has faced so far, and it marks a turning point in how America plans to govern the tech shaping our future.

What’s the Issue? AI Still Hallucinates, and It’s Getting Risky

Despite massive advancements, generative AI models still hallucinate, invent facts, or offer emotionally harmful guidance. The attorneys general argue that these outputs can mislead, manipulate, or even endanger vulnerable people, especially teens and users struggling with mental-health concerns.

Think about advice on self-harm, eating disorders, medical conditions, or even highly emotional life decisions. These models aren’t perfect, but millions treat them like they are.

And regulators have noticed.

What the Attorneys General Want

The AG coalition isn’t asking nicely; they’re demanding action. Their main requests include:

- More rigorous safety testing before products go public

- Clear, enforceable content policies for harmful or sensitive topics

- Regular independent audits to verify safety claims

- Better user protections and disclaimers

Essentially, they want AI companies to treat their tools like powerful products that require safety standards, not just cool tech demos.

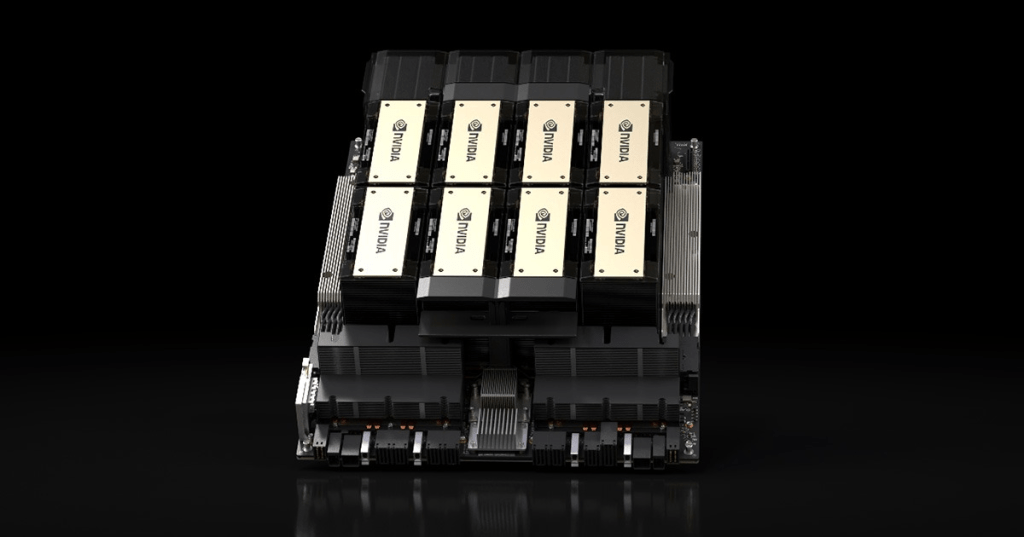

Big Tech Feels the Pressure

This comes at a time when tech giants are racing to build bigger, more capable AI models, sometimes pushing updates faster than safety teams can keep up. The AGs are signaling that speed can no longer outrun accountability.

For Microsoft, Google, OpenAI, Meta, and others, this could mean:

- slower rollouts

- heavier compliance costs

- more guardrails

- and, most importantly, real legal consequences if things go wrong

Why This Matters for Everyday Users

Whether you’re using AI to learn, create, research, or find emotional support, this crackdown means the government is finally stepping in to make these systems safer.

The message is crystal clear:

If AI is going to play therapist, teacher, advisor, or companion, then it needs adult supervision.

This move by 42 U.S. attorneys general isn’t anti-AI; it’s pro-responsibility. The technology is powerful, but the risks are equally real. And as AI becomes woven into daily life, regulators are making sure Big Tech can’t shrug off harm as “beta issues” anymore.

The AI revolution isn’t slowing down. But from now on, it’s going to have to follow the rules.
