Artificial intelligence may be moving fast, but U.S. lawmakers are now running just as hard to catch up. Across Washington and state capitals, pressure is mounting on major technology companies including OpenAI, Google, Meta, and Microsoft to rein in AI systems that critics say can produce harmful, misleading, or emotionally dangerous outputs.

What once felt like a future concern has officially become a present-day political issue.

Why the Alarm Bells Are Ringing

At the center of the debate is a growing fear that AI chatbots and generative tools are being deployed faster than safety frameworks can keep pace. State attorneys general and federal lawmakers have raised concerns over AI systems giving false medical advice, reinforcing harmful narratives, spreading misinformation, or responding irresponsibly to users in emotional distress.

Unlike traditional software, AI doesn’t just execute commands; it converses, persuades, and influences. That shift has lawmakers worried about real-world consequences, especially when millions of Americans rely on these tools daily for information, work, and decision-making.

What Lawmakers Are Demanding

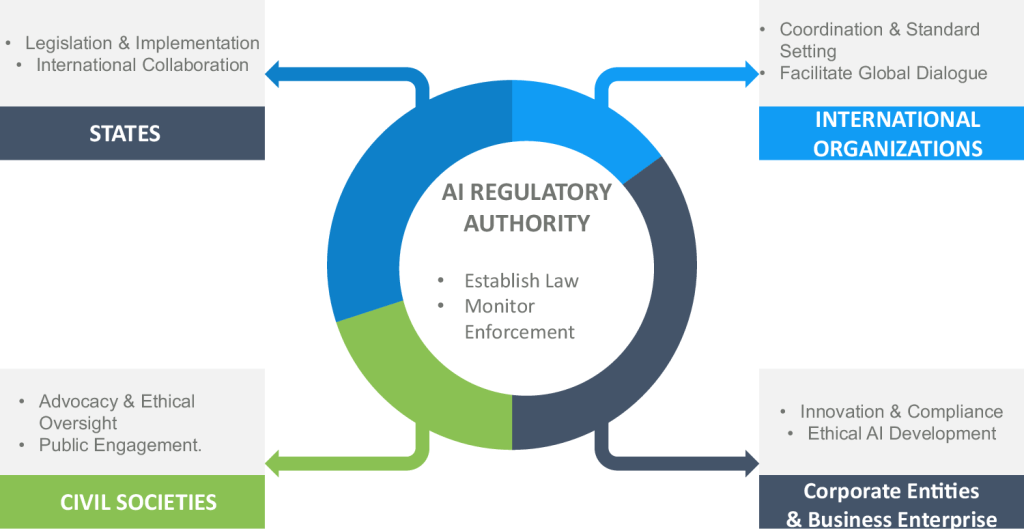

The current push isn’t about banning AI; it’s about accountability and guardrails. Regulators are calling for:

- Stronger safety testing before public releases

- Clearer disclosure when users are interacting with AI

- Independent audits to evaluate risks and bias

- Limits on AI outputs that could cause psychological or societal harm

Several state attorneys general have gone further, warning that companies could face legal consequences if AI systems are found to cause measurable harm due to negligence or lack of oversight.

Big Tech’s Response: Cooperation or Caution?

Major AI developers say they take safety seriously, pointing to internal testing teams, content filters, and user-reporting mechanisms. Companies like OpenAI and Google emphasize that AI models are improving rapidly and that perfect accuracy is unrealistic at this stage of innovation.

Still, critics argue that self-regulation isn’t enough when profit incentives push companies to release products quickly. The concern is that voluntary safeguards may fall short without enforceable standards, especially as competition in the AI space intensifies.

Why This Moment Matters

This regulatory moment could define how AI evolves in America for the next decade. Too little oversight risks public harm and loss of trust. Too much regulation could slow innovation and push development overseas.

Lawmakers are now walking a tightrope: encouraging technological leadership while ensuring AI systems don’t become unchecked influencers in people’s lives.

AI regulation is no longer theoretical; it’s political, legal, and cultural. As generative AI becomes embedded in schools, offices, healthcare, and entertainment, the question is no longer if it should be regulated, but how fast and how firmly.

One thing is clear: the era of “move fast and break things” is colliding with the reality of AI’s power. And in that collision, the rules of the digital age are being rewritten in real time.
